ESTUARY FLOW

Estuary Flow is the only platform purpose-built for real-time ETL and ELT data pipelines. Batch-load for analytics, and stream for ops and AI - all set up in minutes, with millisecond latency.

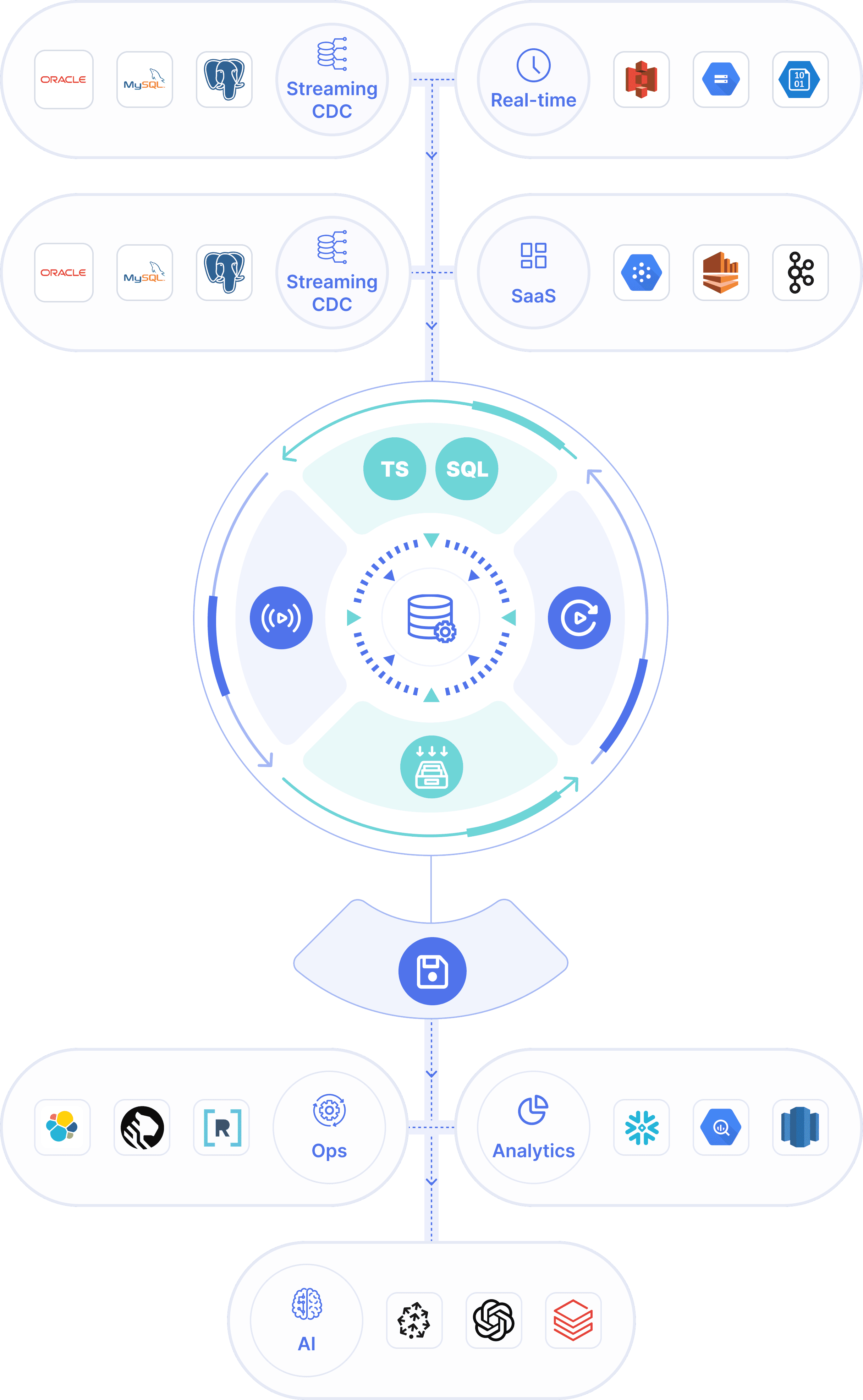

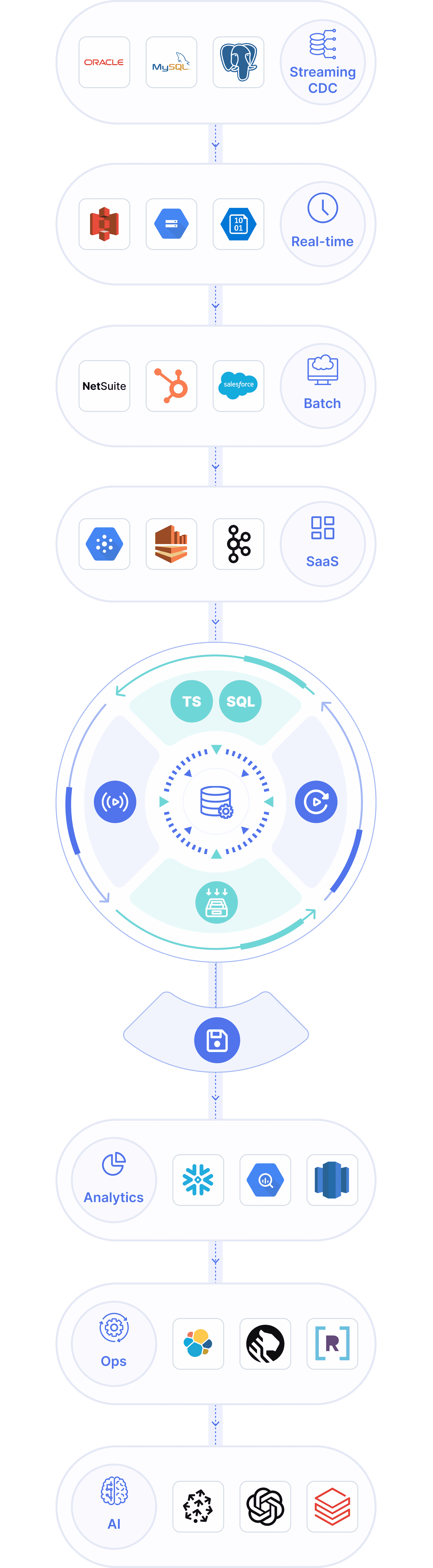

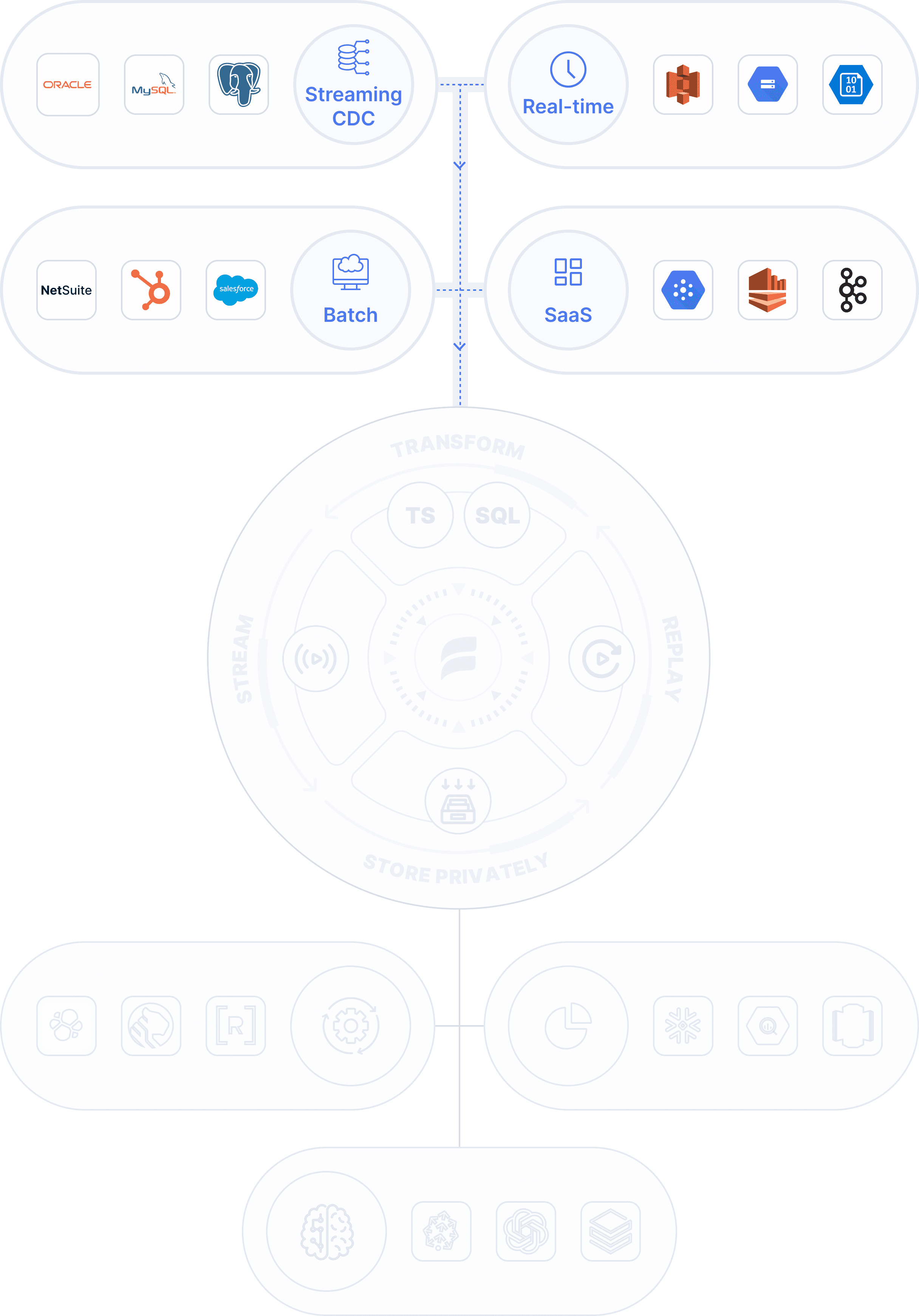

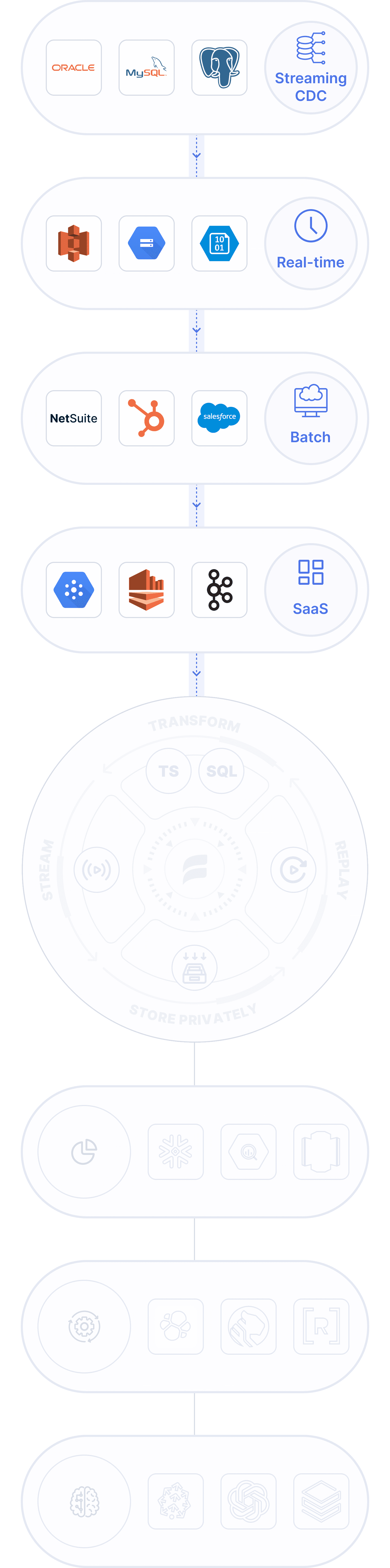

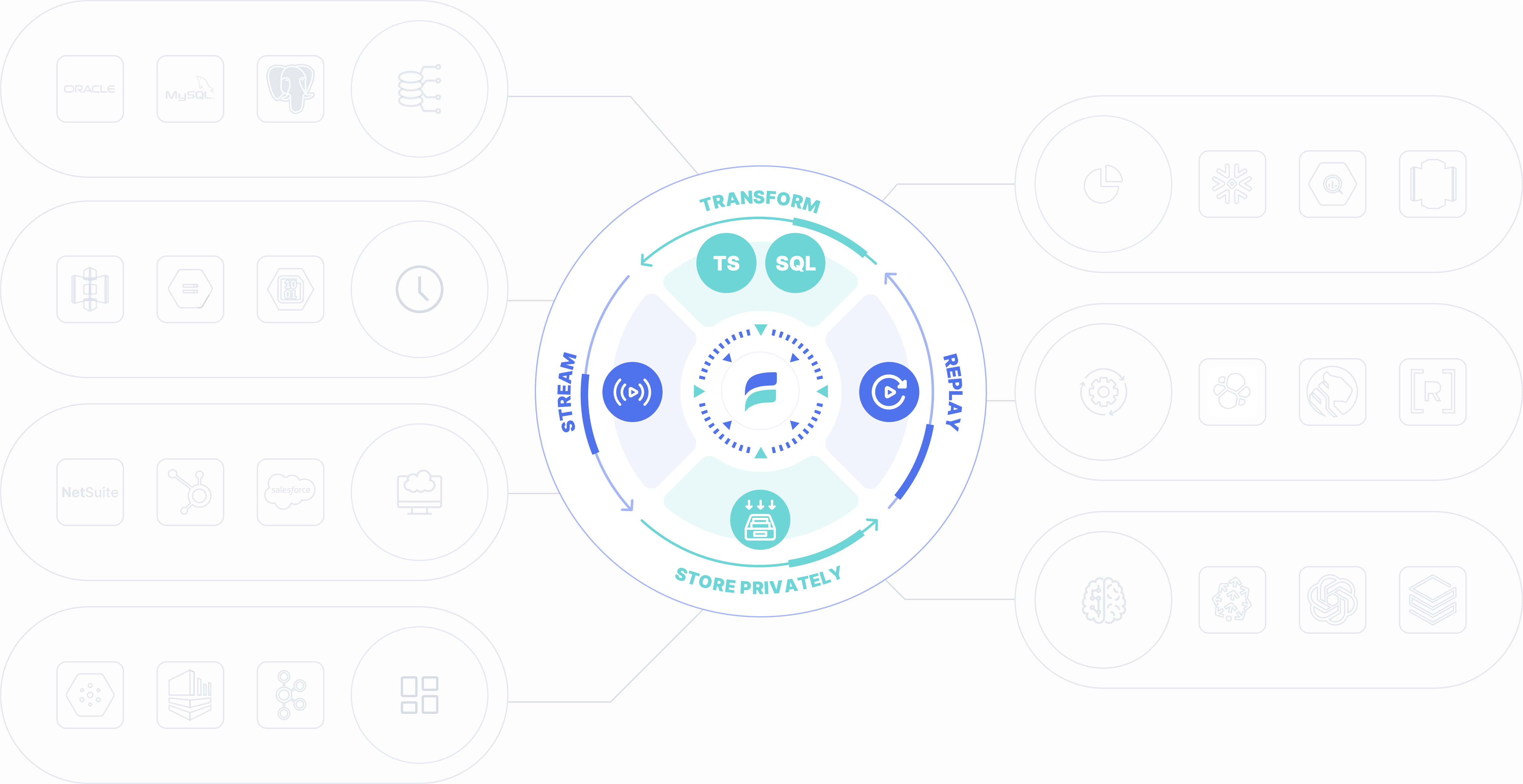

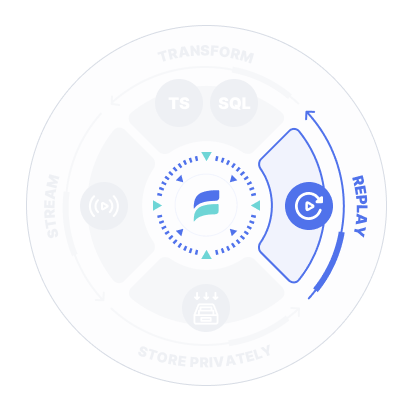

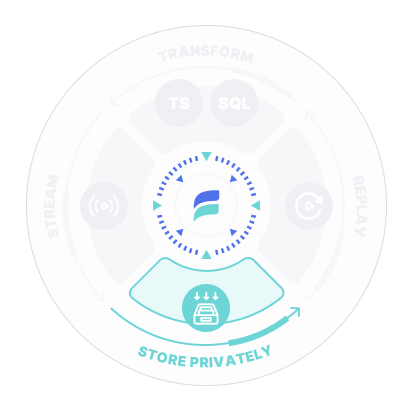

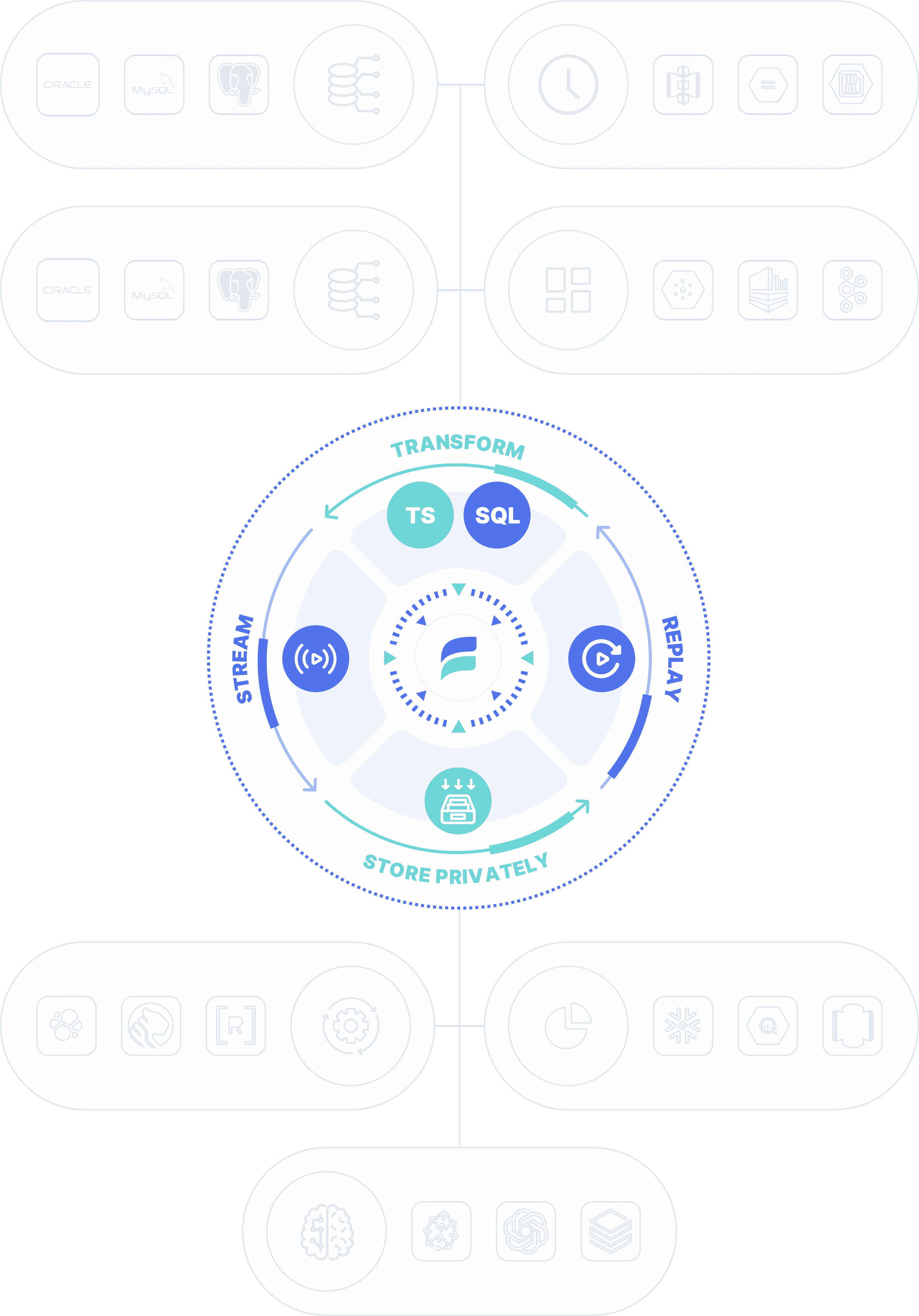

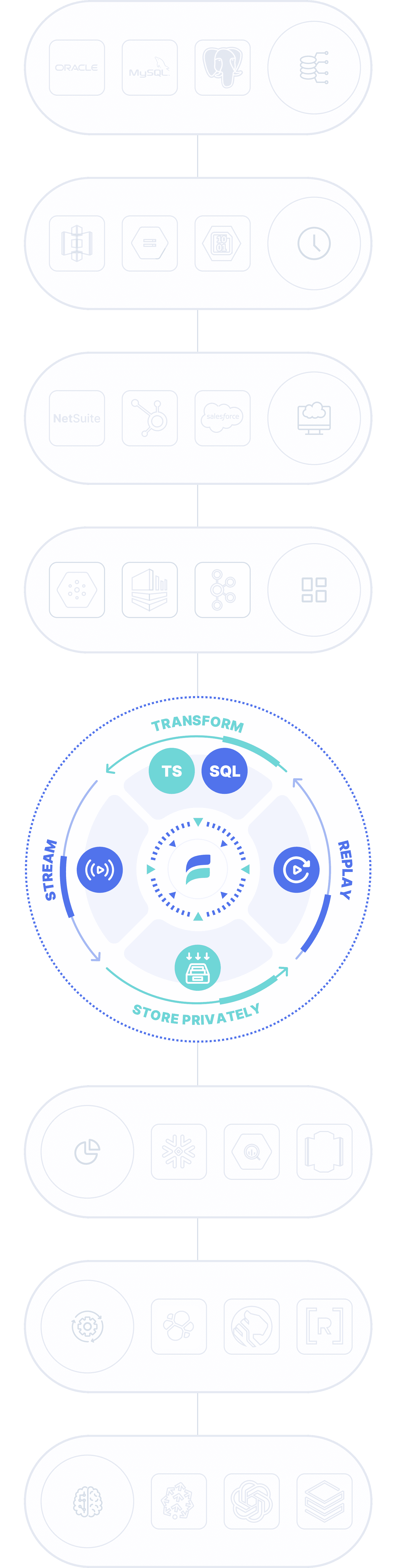

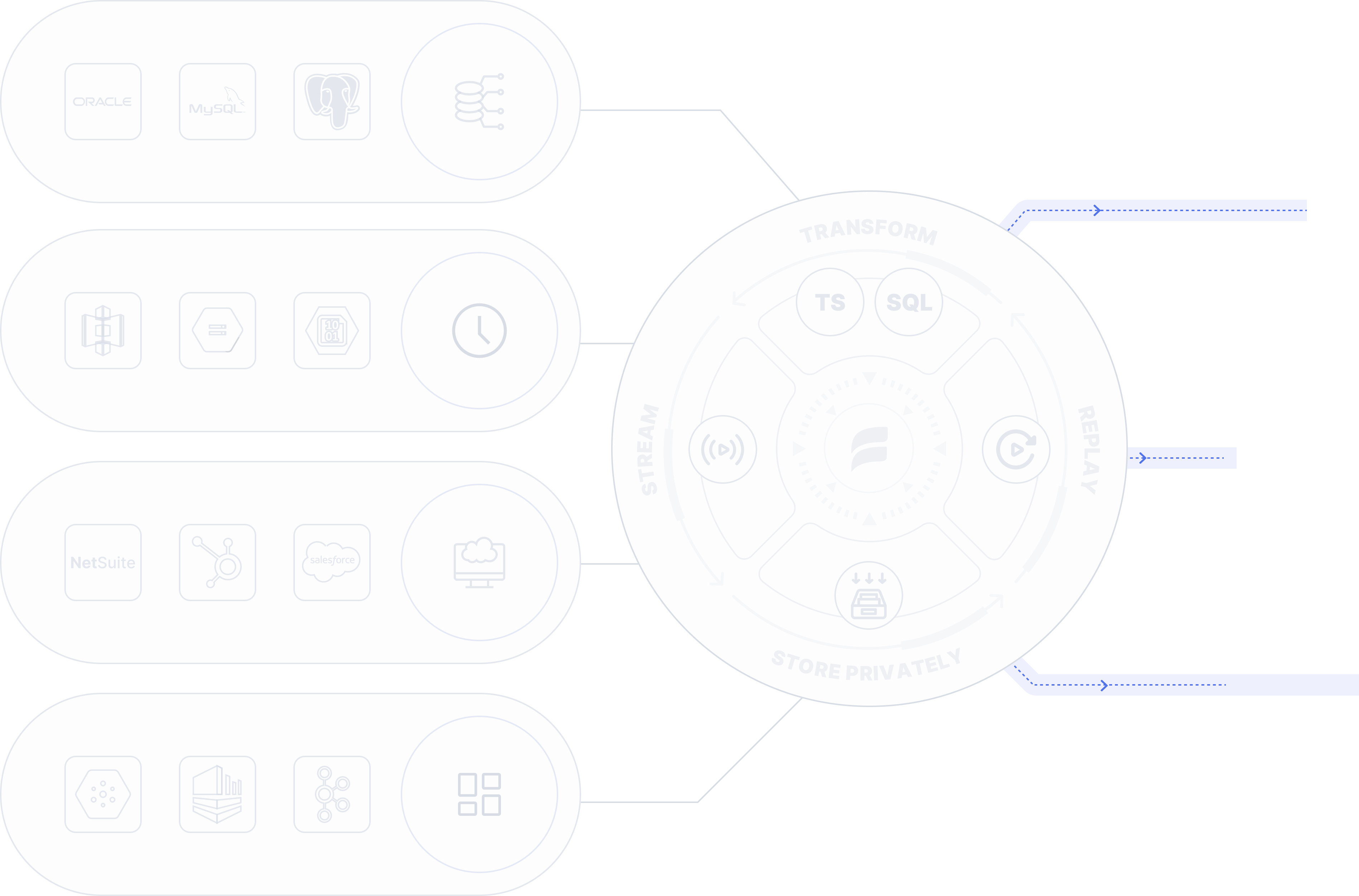

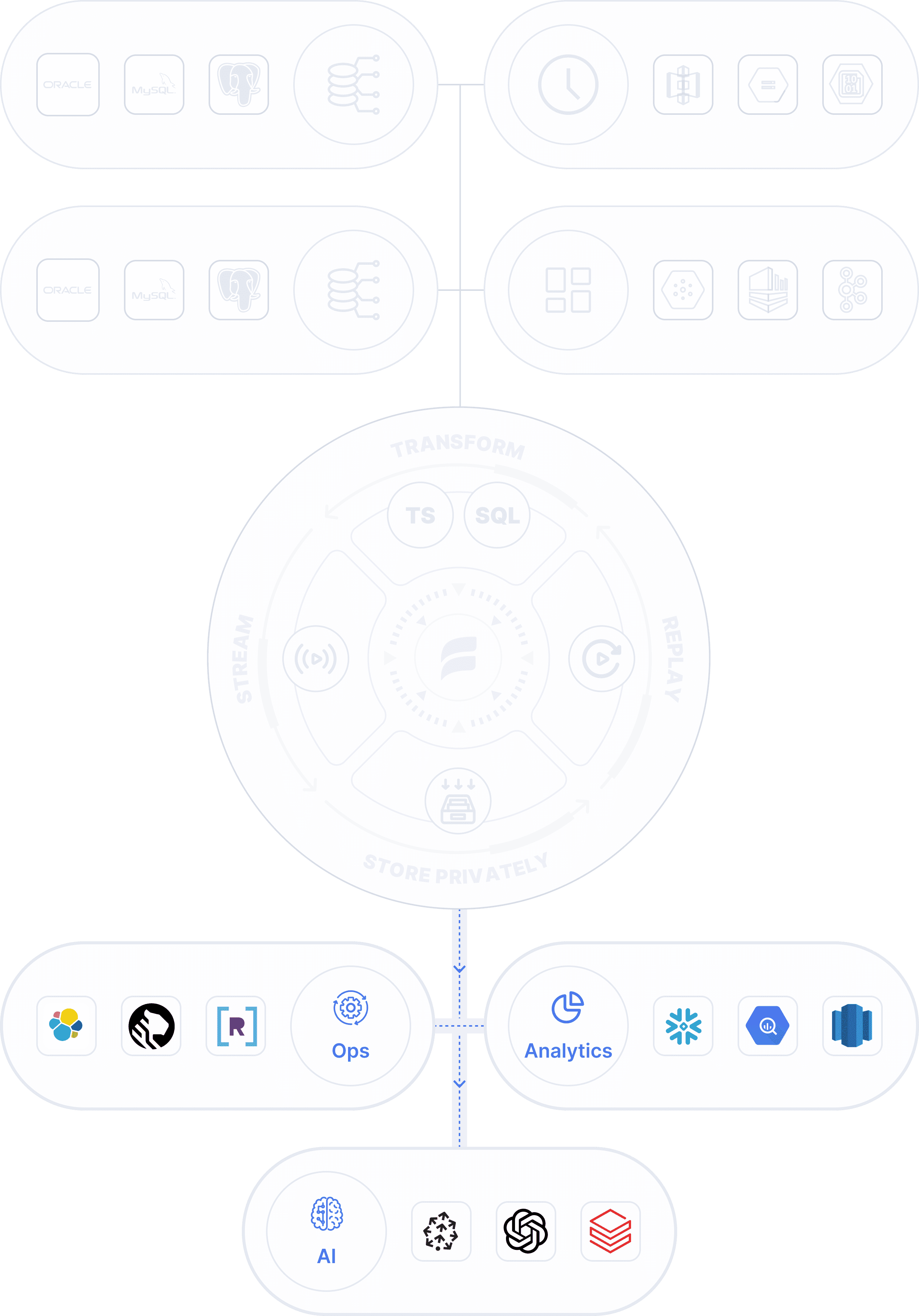

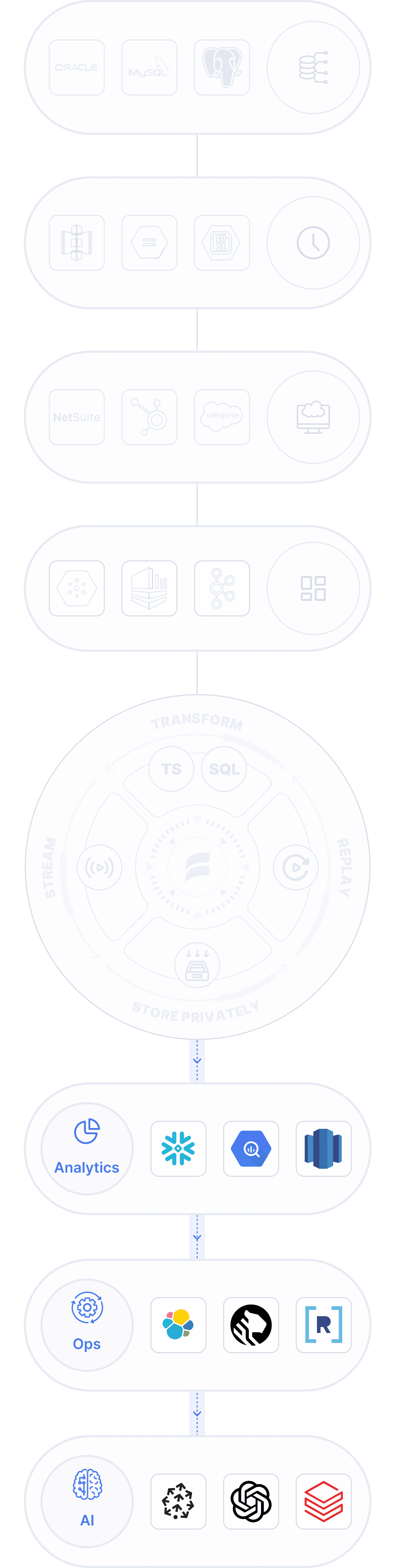

HOW IT WORKS

KEY FEATURES

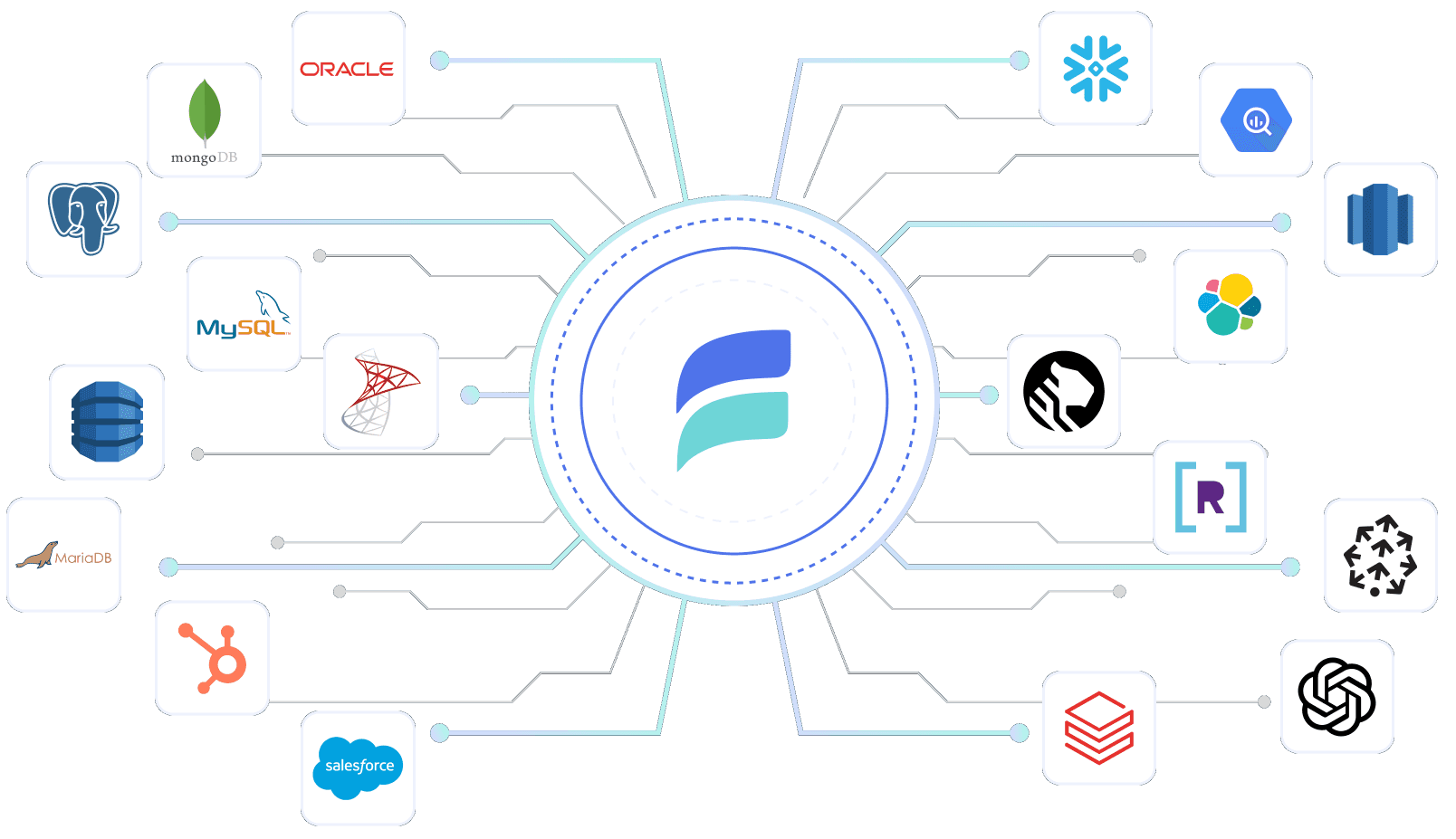

Estuary Flow stands out because it combines CDC, real-time, and batch data movement with modern data engineering best practices, so you get the best of both streaming and batch without managing infrastructure.

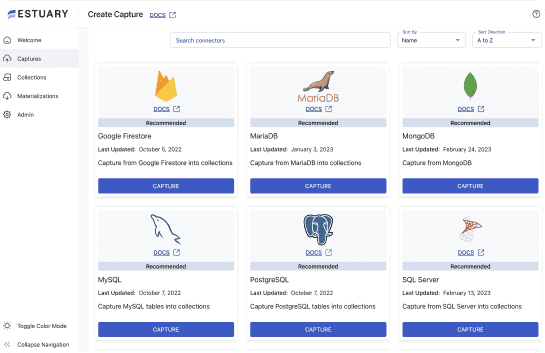

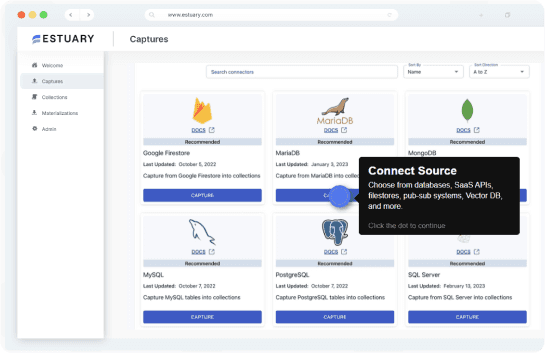

No-code connectors

Connect apps, analytics, and AI using 100s of streaming CDC, real-time, and batch no-code connectors built by Estuary for speed and scale.

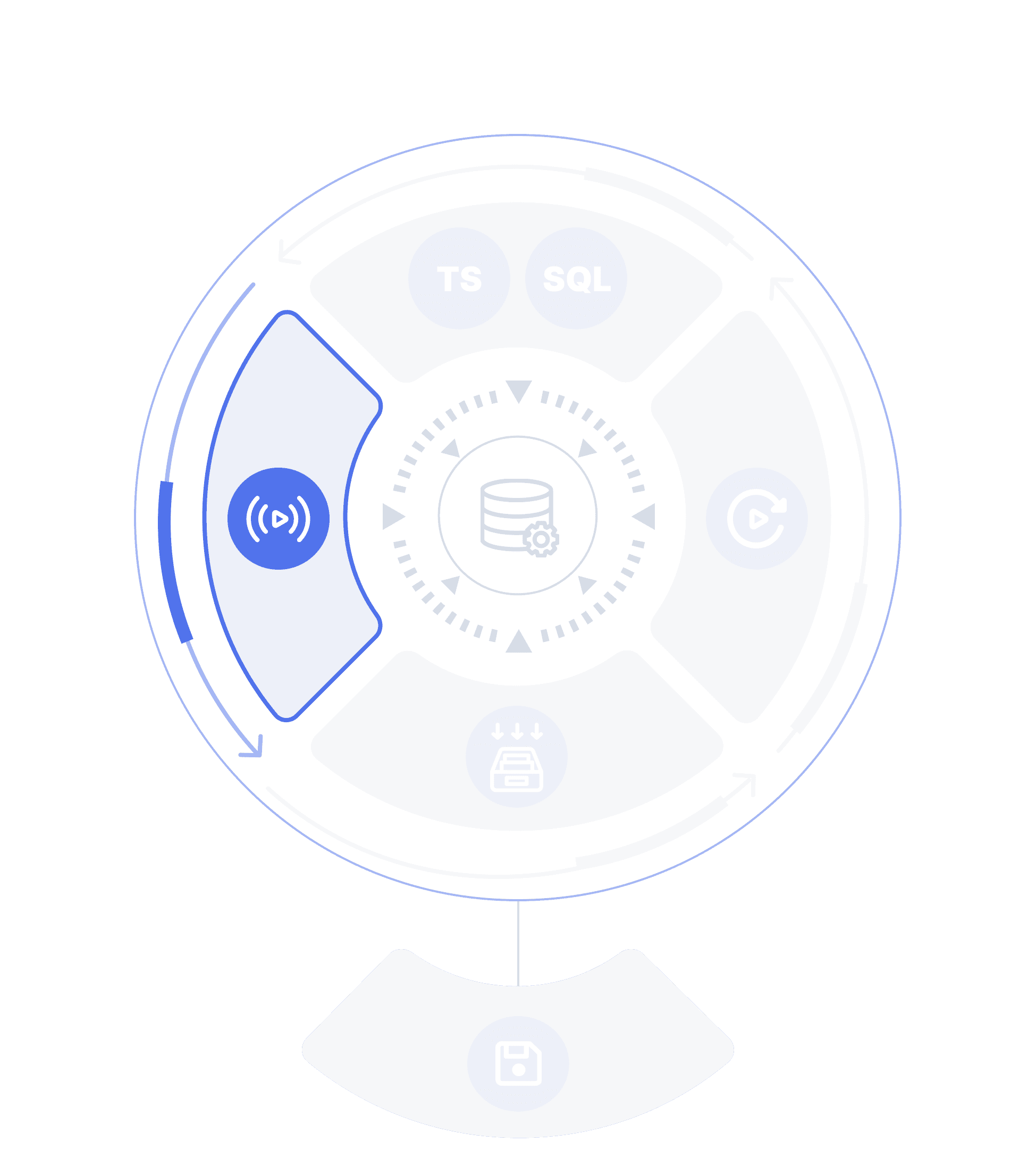

End-to-end CDC

Perform end-to-end streaming CDC.

- Stream transaction logs + incremental backfill.

- Capture change data to a collection.

- Reuse for transformations or destinations.
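The three steps above can be pictured with a small sketch. It is not Flow's implementation: `ChangeEvent`, `capture`, and `count_ops` are hypothetical names, and the sketch assumes the backfill finishes before any log events arrive, which is a simplification of incremental backfill.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ChangeEvent:
    """One captured change (hypothetical shape, for illustration only)."""
    op: str               # "c" = create, "u" = update, "d" = delete
    key: int              # primary key of the changed row
    row: Optional[dict]   # new row contents, or None for a delete


def capture(backfill_rows, log_events):
    """Build one append-only collection: backfilled rows first, then log changes."""
    collection = [ChangeEvent("c", row["id"], row) for row in backfill_rows]
    collection.extend(log_events)
    return collection


def count_ops(collection):
    """An example transformation that reuses the collection: changes per operation."""
    return dict(Counter(event.op for event in collection))


def send_to_destination(collection, destination):
    """An example destination that reuses the same collection without re-reading the source."""
    destination.extend(collection)
    return destination
```

The point of the sketch is that the source is read once, and both the transformation and the destination read from the collection rather than from the database.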

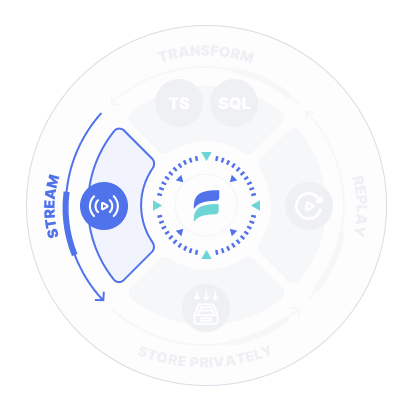

Kafka compatibility

Use Flow Dekaf to connect any Kafka-compatible destination to Flow through the destination's existing Kafka consumer API support, as if Flow were a Kafka cluster.
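As a rough sketch of what "as if it were a Kafka cluster" means for a consumer, the helper below assembles ordinary Kafka client settings. The property names are the standard librdkafka-style keys; the helper name, the use of SASL_SSL with the PLAIN mechanism, and every value passed in are assumptions for illustration, so check the Dekaf documentation for the actual endpoint and credentials.

```python
def kafka_consumer_config(bootstrap_servers, username, token, group_id):
    """Return standard Kafka consumer settings for a SASL-authenticated endpoint.

    All argument values are placeholders supplied by the caller; nothing here
    is specific to Flow beyond the idea of pointing a normal consumer at it.
    """
    return {
        "bootstrap.servers": bootstrap_servers,  # assumed endpoint, e.g. host:port
        "security.protocol": "SASL_SSL",         # assumption: TLS with SASL auth
        "sasl.mechanism": "PLAIN",               # assumption: username/token auth
        "sasl.username": username,
        "sasl.password": token,
        "group.id": group_id,
        "auto.offset.reset": "earliest",         # start from the oldest data
    }


# Hypothetical values; replace them with the ones from your own account.
config = kafka_consumer_config(
    "dekaf.example.com:9092", "example-user", "example-token", "analytics-reader"
)
```

A dictionary like this is the form that common Kafka clients accept, so the destination needs no Flow-specific code.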

Real-time and batch

Combine streaming CDC, real-time, and batch connectors in the same pipeline, and choose the speed of each connection from real-time to hour+ batch.

Private storage

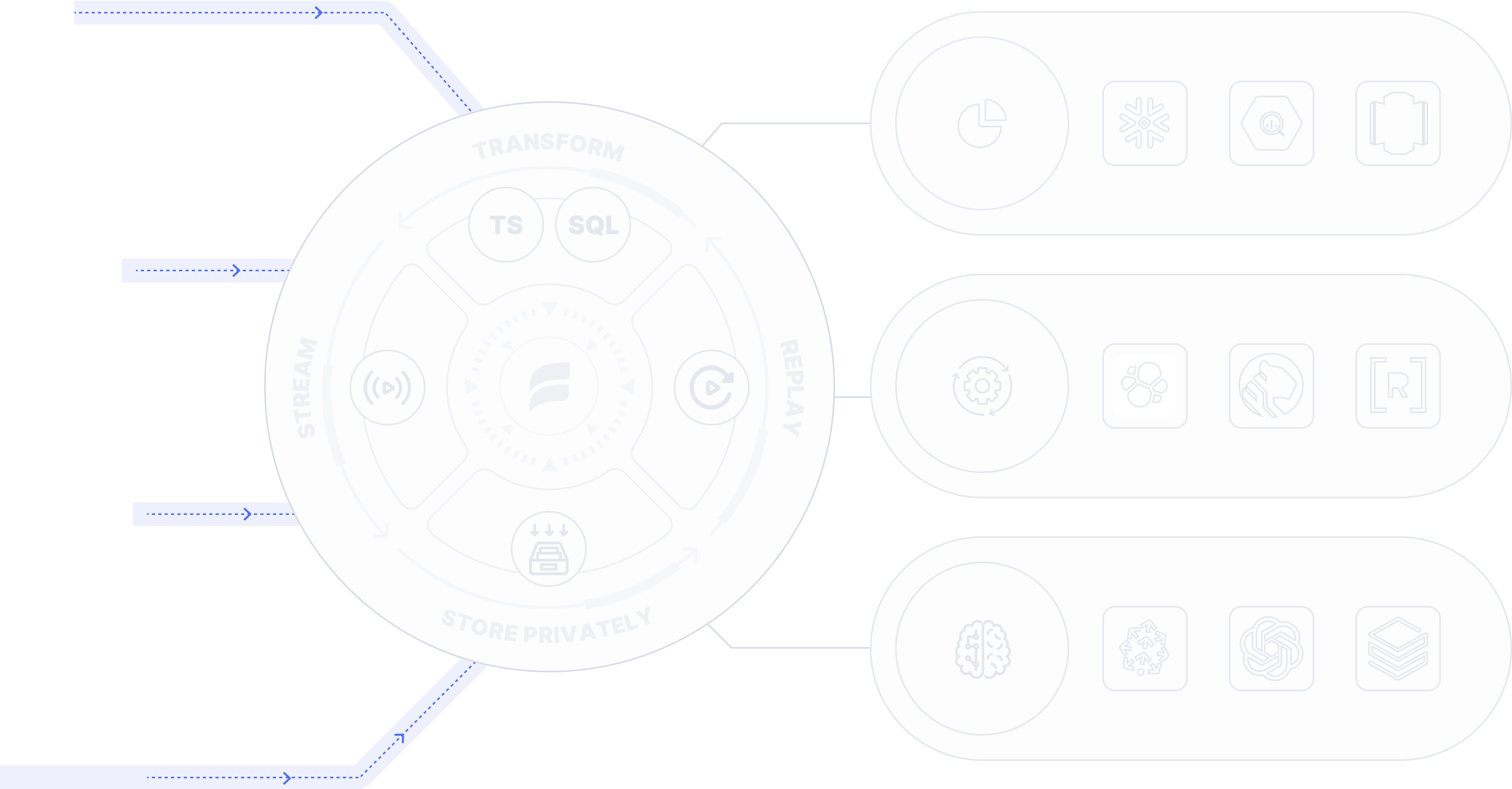

As you capture data, Flow automatically stores each stream as a reusable collection, like a Kafka topic but with unlimited storage. It is a durable append-only transaction log stored in your own private account so you can set security rules and encryption.

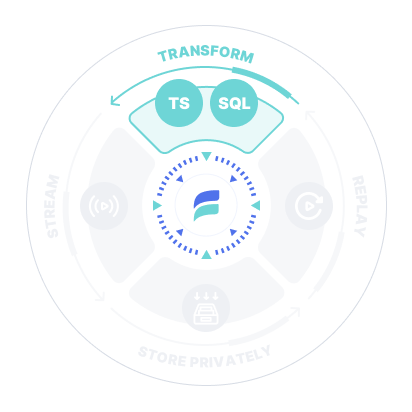

ELT and ETL

Transform and derive data in real time (ETL) using SQL or TypeScript for operations, or use dbt to transform data (ELT) for analytics.

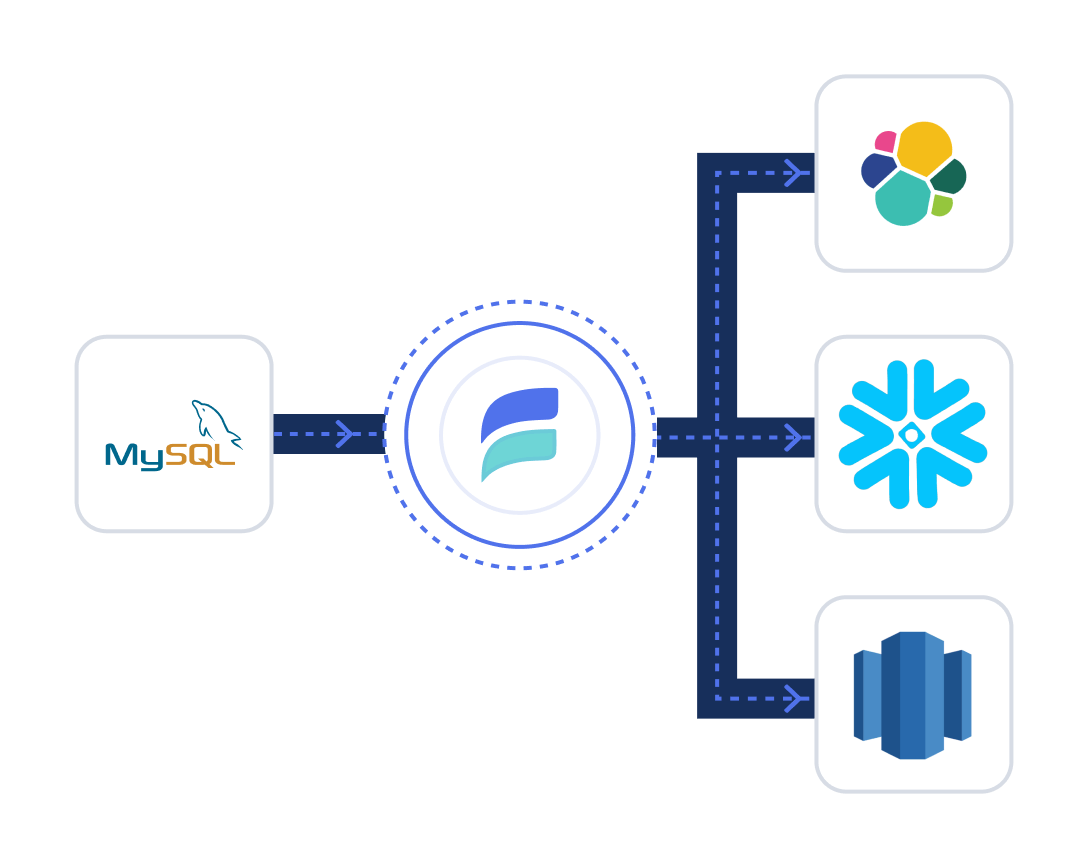

Many to many

Move data from many sources to collections, then to many destinations all at once. Share and reuse data across projects, or replace sources and destinations without impacting others.

Backfill and replay

Reuse collections to backfill destinations at any time, enabling fast and effective one-to-many distribution, streaming transformations, and time travel.
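A minimal sketch of the idea, assuming a collection is an append-only list of timestamped documents. The `Collection` class and `backfill` function are hypothetical and only illustrate why a stored log makes replay and time travel possible.

```python
from dataclasses import dataclass, field


@dataclass
class Collection:
    """Append-only list of (timestamp, document) pairs (illustrative only)."""
    docs: list = field(default_factory=list)

    def append(self, ts, doc):
        self.docs.append((ts, doc))

    def read(self, since=None, until=None):
        """Yield documents with since <= timestamp < until; None means unbounded."""
        for ts, doc in self.docs:
            if (since is None or ts >= since) and (until is None or ts < until):
                yield doc


def backfill(collection, destination, since=None, until=None):
    """Replay stored documents into a destination without touching the source."""
    for doc in collection.read(since, until):
        destination.append(doc)
    return destination


orders = Collection()
orders.append(1, {"id": "a"})
orders.append(2, {"id": "b"})
orders.append(3, {"id": "c"})

warehouse = backfill(orders, [])            # full history for a new destination
snapshot = backfill(orders, [], until=3)    # time travel: state before t=3
```

Because the collection is never consumed destructively, any number of destinations can be backfilled from it later.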

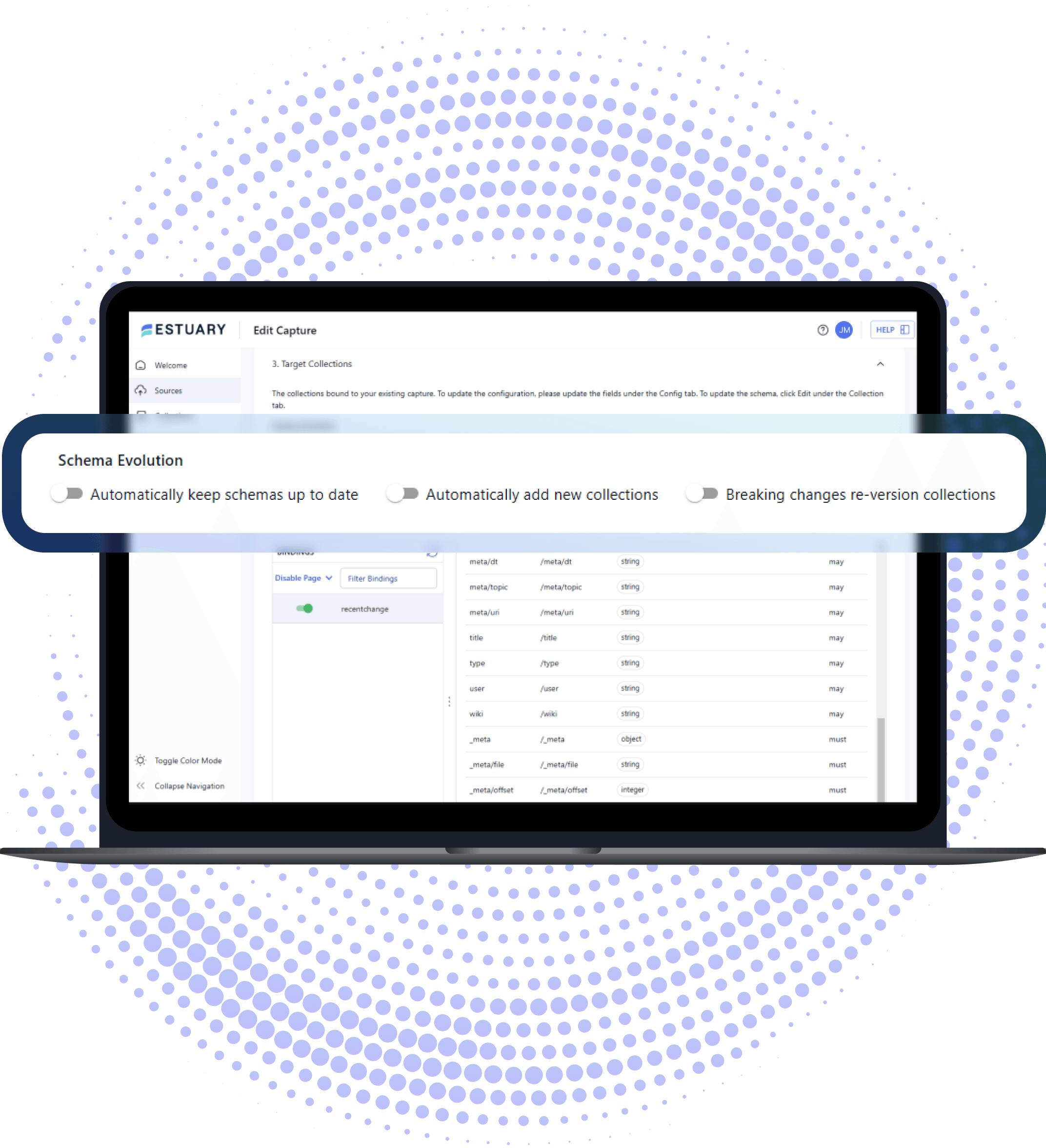

Schema evolution

Schemas are automatically inferred and managed from source to destination using schema evolution.

- Automated downstream updates.

- Continuous data validation and testing.
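To make inference and validation concrete, here is a small sketch that widens a schema as new documents arrive and checks documents against it. The functions `infer_type`, `widen`, and `validate` are hypothetical, and the type names are borrowed from JSON Schema; Flow's actual inference is more involved.

```python
def infer_type(value):
    """Map a Python value to a JSON-Schema-style type name."""
    if value is None:
        return "null"
    if isinstance(value, bool):   # check bool before int: bool is an int subclass
        return "boolean"
    if isinstance(value, int):
        return "integer"
    if isinstance(value, float):
        return "number"
    if isinstance(value, str):
        return "string"
    if isinstance(value, list):
        return "array"
    return "object"


def widen(schema, doc):
    """Return a new schema in which each field admits every type seen so far."""
    widened = {name: set(types) for name, types in schema.items()}
    for name, value in doc.items():
        widened.setdefault(name, set()).add(infer_type(value))
    return widened


def validate(schema, doc):
    """True if every field in doc is known and has an allowed type."""
    return all(
        name in schema and infer_type(value) in schema[name]
        for name, value in doc.items()
    )
```

For example, after `widen({}, {"id": 1})` a document with `"id": "x"` fails validation until the schema is widened again with that document, which is the kind of change schema evolution then propagates downstream.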

CLI and API automation using flowctl.

Multi-cloud deployment

Deploy each capture, SQL or TypeScript task, and materialization of a single pipeline in the same or different public or private clouds and regions.

CREATE A DATA PIPELINE IN MINUTES

Build new data pipelines that connect many sources to many destinations in minutes.

1

Add 100s of sources and destinations using no-code connectors for streaming CDC, real-time, batch, and SaaS. (see connectors).

2

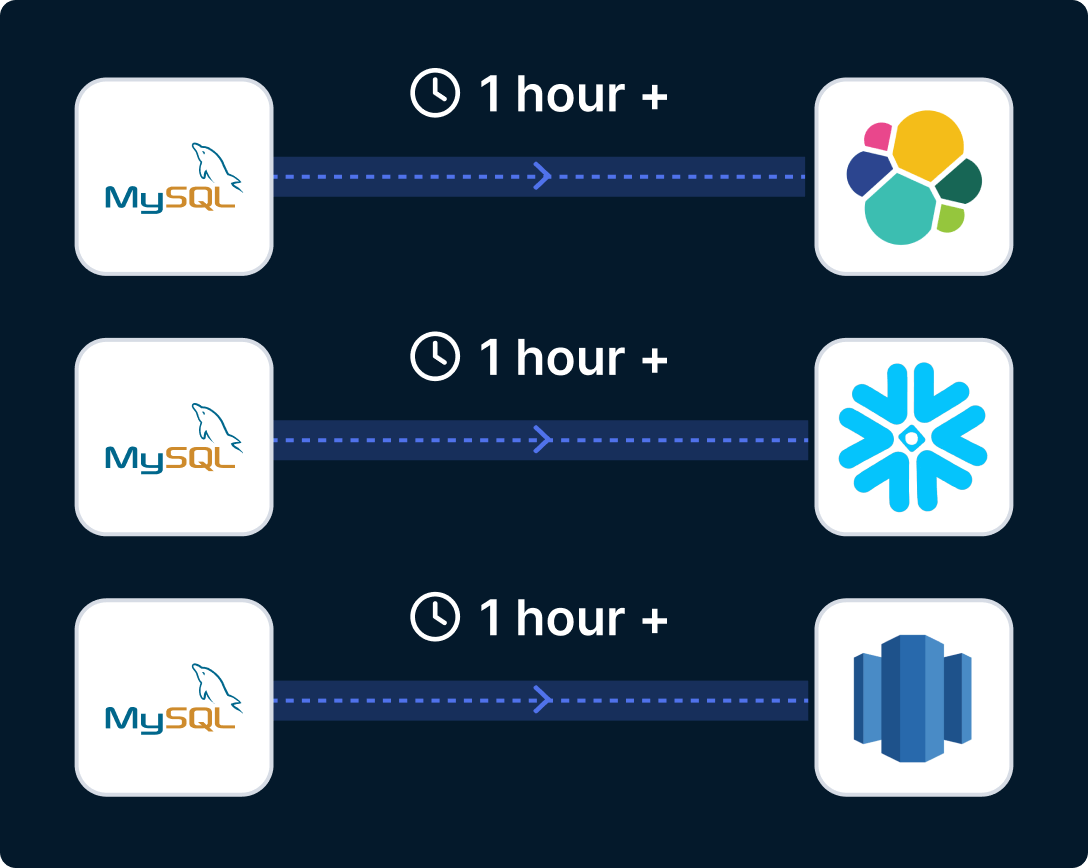

Choose any speed for each connection from real-time to hour+ batch; schedule fast updates when you need them to save money.

3

Write in-place updates or the full change history into a destination.

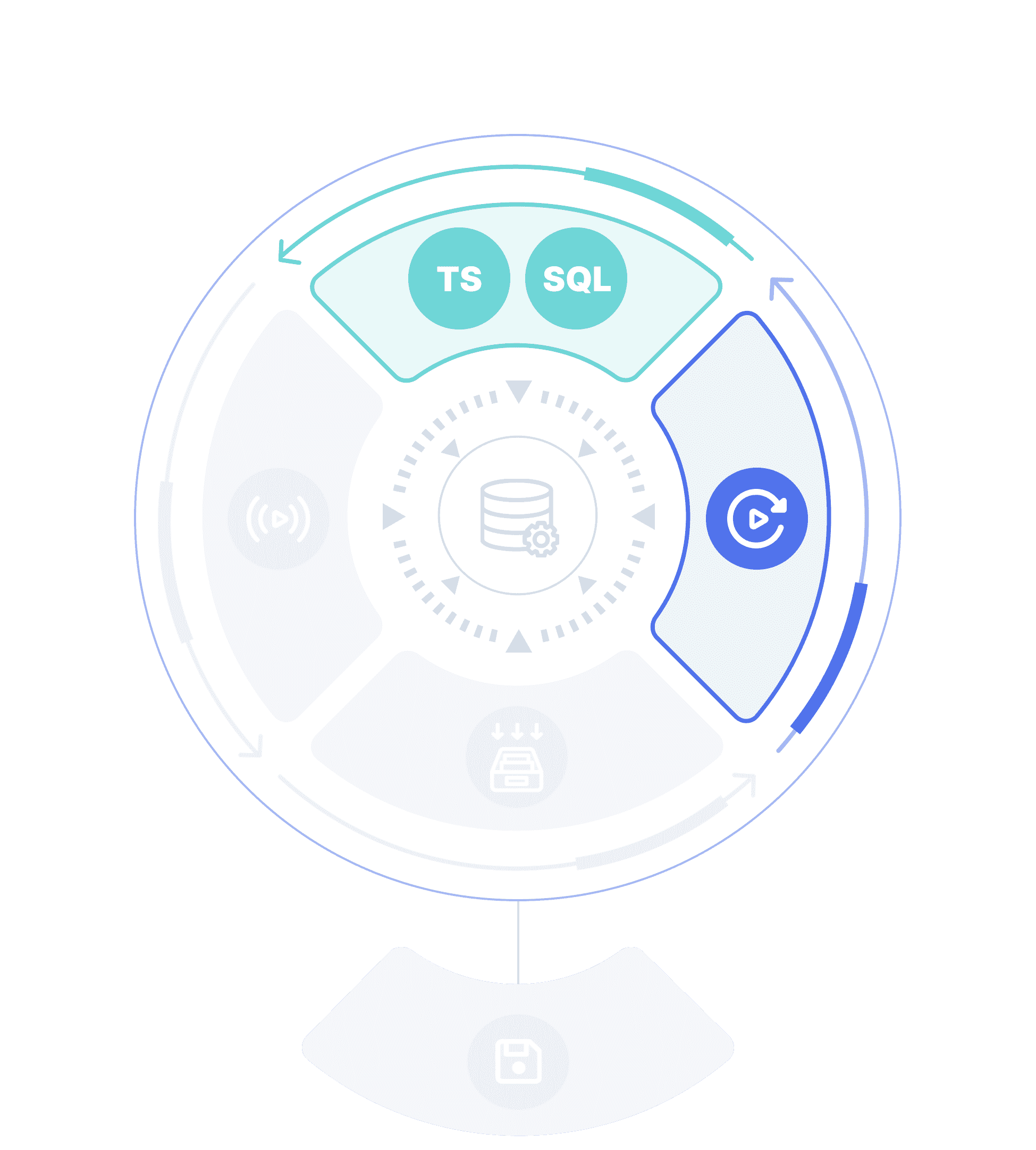
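The difference between in-place updates and full change history can be sketched as two ways of applying the same events. Both functions and the `(op, key, row)` event shape are hypothetical, chosen only to illustrate the two modes.

```python
def materialize_in_place(table, event):
    """Keep only the latest row per key: upsert on insert/update, remove on delete."""
    op, key, row = event
    if op == "delete":
        table.pop(key, None)
    else:
        table[key] = row
    return table


def materialize_history(log, event):
    """Keep every change as its own record, so nothing is overwritten."""
    log.append(event)
    return log


events = [
    ("insert", 1, {"status": "new"}),
    ("update", 1, {"status": "paid"}),
    ("insert", 2, {"status": "new"}),
    ("delete", 2, None),
]

table, history = {}, []
for event in events:
    materialize_in_place(table, event)
    materialize_history(history, event)
```

The in-place table ends up with one row for key 1, while the history keeps all four events for auditing or later analysis.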

CONFIGURE OR CODE

Choose the best combination of no-code configuration and coding to move and transform data.

Use 100s of no-code connectors for apps, DBs, DWs, and more.

Use the Flow UI to build without coding, or the flowctl CLI for development.

Transform using streaming SQL (ETL) and TypeScript (ETL), or dbt (ELT) in your warehouse.

USE MODERN DATAOPS

Rely on built-in data pipeline best practices, integrate tooling, and automate DataOps to improve productivity and reduce downtime.

Automate DataOps and integrate with other tooling using the flowctl CLI.

Use built-in pipeline testing to validate data and pipeline flows automatically.

Select advanced schema detection and automate schema evolution.

INCREASE PRODUCTIVITY, LOWER COSTS

Be 4x more productive and focus more on new development and less on troubleshooting.

Spend 2-5x less with low, predictable pricing (see pricing).

Minimize source loads and costs by extracting data only once from each source.

Lower destination costs by using real-time extraction with batch loading. Then schedule faster updates only when you need them.
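One way to picture real-time extraction with batch loading is a buffer that accepts documents as they arrive but only loads the destination on an interval. The `BatchLoader` class is a hypothetical sketch under that assumption, with time passed in explicitly so the behavior is easy to follow.

```python
class BatchLoader:
    """Buffer documents continuously; load the destination once per interval."""

    def __init__(self, flush_interval_s, load):
        self.flush_interval_s = flush_interval_s
        self.load = load          # callable that receives one batch (a list)
        self.buffer = []
        self.last_flush = None    # set when the first document arrives

    def on_document(self, doc, now):
        """Called for every extracted document; `now` is a time in seconds."""
        if self.last_flush is None:
            self.last_flush = now
        self.buffer.append(doc)
        if now - self.last_flush >= self.flush_interval_s:
            self.flush(now)

    def flush(self, now):
        """Send everything buffered so far as a single batch."""
        if self.buffer:
            self.load(list(self.buffer))
            self.buffer.clear()
        self.last_flush = now


loads = []
loader = BatchLoader(flush_interval_s=60, load=loads.append)
for t, doc in [(0, "a"), (10, "b"), (59, "c"), (60, "d"), (61, "e")]:
    loader.on_document(doc, now=t)
```

Lowering `flush_interval_s` gives faster updates at the cost of more destination loads, which is the trade-off the scheduling option exposes.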

CONNECT&GO

Connect&GO lowers MySQL-to-Snowflake latency by up to 180x and improves productivity 4x with Estuary.

TRUE PLATFORM

True Platform reduced its data pipeline spend by >2x and discovered seamless, scalable data movement.

SOLI & COMPANY

Soli & Company trusts Estuary's approachable pricing and quick setup to deliver change data capture solutions.

DELIVER REAL-TIME DATA AT SCALE

Estuary Flow delivers reliable, real-time performance in production for over 3,000 active users, including some of the most demanding workloads, and is proven at 10x the scale of the alternatives.

Stream in real time at any scale, with over 7GB/sec running in production for a single customer.

Ensure data is never lost with exactly-once, transactional storage and delivery.

Use built-in monitoring and alerting, and active-active load balancing and failover.
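Exactly-once delivery is commonly achieved by committing a checkpoint together with the data, so a redelivered batch is recognized and skipped. The sketch below shows that general technique; the `Destination` class is hypothetical and is not a description of how Flow implements it.

```python
class Destination:
    """Stores running totals plus the offset of the next document to apply."""

    def __init__(self):
        self.totals = {}
        self.checkpoint = 0

    def commit(self, start_offset, docs):
        """Apply a batch whose first document has offset `start_offset`.

        Data and checkpoint are staged and then swapped in together, standing
        in for a single database transaction. Returns the number of documents
        actually applied.
        """
        totals = dict(self.totals)
        checkpoint = self.checkpoint
        applied = 0
        for offset, (key, amount) in enumerate(docs, start=start_offset):
            if offset < checkpoint:
                continue  # already applied by an earlier attempt
            if offset > checkpoint:
                raise ValueError(f"gap: expected offset {checkpoint}, got {offset}")
            totals[key] = totals.get(key, 0) + amount
            checkpoint = offset + 1
            applied += 1
        self.totals, self.checkpoint = totals, checkpoint
        return applied


dest = Destination()
batch = [("a", 1), ("b", 2), ("a", 3)]
first = dest.commit(0, batch)    # applies all three documents
retry = dest.commit(0, batch)    # redelivery after a failure: nothing is reapplied
```

Because the totals are additive, a double-applied batch would be visible immediately; the checkpoint is what keeps the retry harmless.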

- 7+GB/sec single dataflow

- 3000+ active users

- <100ms latency

SECURE YOUR DATA

Estuary Flow is designed and tested to make sure your data and your systems stay secure.

Estuary never stores your data.

GDPR, CCPA and CPRA compliant.

SOC 2 Type II certified.

STREAMING ETL VS. BATCH ELT

STREAMING ETL

With Estuary, you extract data exactly and only once using CDC, real-time, or batch; use ELT and ETL; and deliver to many destinations with one pipeline.

HOW ESTUARY FLOW COMPARES

Feature Comparison

| | Estuary | Batch ELT/ETL | DIY Python | Kafka |
|---|---|---|---|---|
| Price | $ | $$-$$$$ | $-$$$$ | $-$$$$ |
| Speed | <100ms | 5min+ | Varies | <100ms |
| Ease | Analysts can manage | Analysts can manage | Data Engineer | Senior Data Engineer |
| Scale | | | | |

READY TO START?

BUILD A PIPELINE

Try out Estuary free, and build a new pipeline in minutes.

SET UP AN APPOINTMENT

Set up an appointment to get a personalized overview.