PostgreSQL, known for its robustness, feature set, and SQL capabilities, is a popular relational database choice for data-driven enterprise applications. On the other hand, DynamoDB is a highly performant NoSQL database that offers outstanding scalability and requires minimal setup and maintenance efforts from developers.

Migrating from PostgreSQL to DynamoDB comes with several key benefits. DynamoDB's serverless architecture allows for effortless scalability, ensuring applications can easily handle rapid growth and fluctuating workloads. DynamoDB's single-digit millisecond latency, combined with scalability, provides businesses instant access to large volumes of data, delivering real-time responsiveness.

In this article, we’ll explore two popular methods for migrating from Postgres to DynamoDB. Before we delve into the steps and tools, let’s briefly introduce both platforms.

Overview of PostgreSQL

PostgreSQL is a powerful open-source relational database management system (RDBMS) known for its advanced features, extensibility, and performance. It is widely used in various applications ranging from small-scale projects to large enterprise-level systems.

One of the most impressive features of PostgreSQL is its extensibility. You can define your own custom data types beyond the standard integer or text types. You can also write custom functions in several languages, including SQL, PL/pgSQL, Perl, and Python, with others such as Java available through extensions.

Apart from extensibility, Postgres also offers robust support for JSON, allowing you to store, manipulate, and query JSON-formatted data. The JSONB type stores data in a decomposed binary format, which is slightly slower to write than the plain JSON type but faster to query, and it supports indexing. With JSON/JSONB support, PostgreSQL brings features of a document-oriented NoSQL database into a relational database environment.

Overview of DynamoDB

Amazon DynamoDB is a fully managed NoSQL database service provided by Amazon Web Services (AWS). It is designed to offer high scalability, low-latency performance, and seamless handling of massive amounts of data.

DynamoDB follows a NoSQL data model and works as both a key-value and a document store. Data is stored as items, each identified by a primary key. DynamoDB supports two types of primary keys: a simple primary key (a partition key only) and a composite primary key (a partition key plus a sort key).
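To make the two key types concrete, here is a minimal Python sketch that builds the request parameters for DynamoDB's CreateTable operation. The helper name `build_create_table_params`, the table names, and the attribute names are assumptions made for illustration, and on-demand billing is just one possible choice.

```python
def build_create_table_params(table_name, partition_key, sort_key=None):
    """Build keyword arguments for DynamoDB's CreateTable operation.

    partition_key and sort_key are (attribute_name, attribute_type) tuples,
    where the type is "S" (string), "N" (number), or "B" (binary).
    """
    attribute_definitions = [
        {"AttributeName": partition_key[0], "AttributeType": partition_key[1]}
    ]
    # The partition key is declared with KeyType "HASH".
    key_schema = [{"AttributeName": partition_key[0], "KeyType": "HASH"}]
    if sort_key is not None:
        attribute_definitions.append(
            {"AttributeName": sort_key[0], "AttributeType": sort_key[1]}
        )
        # The optional sort key is declared with KeyType "RANGE".
        key_schema.append({"AttributeName": sort_key[0], "KeyType": "RANGE"})
    return {
        "TableName": table_name,
        "AttributeDefinitions": attribute_definitions,
        "KeySchema": key_schema,
        "BillingMode": "PAY_PER_REQUEST",  # on-demand capacity (an assumption)
    }


# Simple primary key: partition key only (hypothetical table).
simple = build_create_table_params("customers", ("customer_id", "S"))

# Composite primary key: partition key plus sort key (hypothetical table).
composite = build_create_table_params(
    "orders", ("customer_id", "S"), ("order_date", "S")
)

# With boto3 installed and AWS credentials configured, the call would be:
#   import boto3
#   boto3.client("dynamodb").create_table(**composite)
```

When migrating from Postgres, the choice of partition and sort key usually follows from how the application queries the data, not from the relational primary key alone.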

Among the many essential features of DynamoDB is Point-In-Time Recovery (PITR). Once enabled, it keeps continuous backups and lets you restore table data, into a new table, to any point in time within the last 35 days. PITR is useful when you need to recover from unintended deletes or writes.
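As a rough sketch of how PITR can be driven from code, the functions below wrap the `update_continuous_backups` and `restore_table_to_point_in_time` operations of the boto3 DynamoDB client. The function names and table names are hypothetical, and the 35-day check is a simplification: the actual restorable window is reported by DynamoDB and may be shorter.

```python
from datetime import datetime, timedelta, timezone

PITR_WINDOW = timedelta(days=35)


def enable_pitr(client, table_name):
    """Turn on point-in-time recovery for a table."""
    return client.update_continuous_backups(
        TableName=table_name,
        PointInTimeRecoverySpecification={"PointInTimeRecoveryEnabled": True},
    )


def restore_to_point(client, source_table, target_table, restore_time, now=None):
    """Restore source_table as it was at restore_time into a NEW table."""
    now = now or datetime.now(timezone.utc)
    # Simplified guard: reject times in the future or older than 35 days.
    if restore_time > now or now - restore_time > PITR_WINDOW:
        raise ValueError("restore_time must fall within the last 35 days")
    return client.restore_table_to_point_in_time(
        SourceTableName=source_table,
        TargetTableName=target_table,
        RestoreDateTime=restore_time,
    )


# Usage, assuming boto3 is installed and credentials are configured:
#   import boto3
#   client = boto3.client("dynamodb")
#   enable_pitr(client, "orders")
#   two_hours_ago = datetime.now(timezone.utc) - timedelta(hours=2)
#   restore_to_point(client, "orders", "orders-restored", two_hours_ago)
```

Passing the client in as an argument keeps the functions easy to exercise against a stub before pointing them at a real table.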

To capture real-time changes to the database, you can use the DynamoDB Streams feature. It can capture data modification events, including adds, deletes, and updates. Each event is stored in a record and presented in a stream. Such streams can be read to gain insights into data changes. Streams help build event-driven applications and keep multiple data sources in sync.
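The sketch below shows one way a consumer might read such records. It assumes the event shape that AWS Lambda passes to a function subscribed to a DynamoDB stream (a `Records` list whose entries carry an `eventName` of `INSERT`, `MODIFY`, or `REMOVE` and a `dynamodb` section with the item keys). The function name and the sample event are made up for illustration.

```python
from collections import Counter


def summarize_stream_records(event):
    """Count stream records by event type and collect the keys they touched."""
    counts = Counter()
    changed_keys = []
    for record in event.get("Records", []):
        event_name = record["eventName"]  # INSERT, MODIFY, or REMOVE
        counts[event_name] += 1
        # Keys are in DynamoDB's typed JSON form, e.g. {"id": {"S": "c-1"}}.
        changed_keys.append((event_name, record["dynamodb"]["Keys"]))
    return dict(counts), changed_keys


# A hand-written sample event (not captured from a real stream).
sample_event = {
    "Records": [
        {"eventName": "INSERT", "dynamodb": {"Keys": {"id": {"S": "c-1"}}}},
        {"eventName": "MODIFY", "dynamodb": {"Keys": {"id": {"S": "c-1"}}}},
        {"eventName": "REMOVE", "dynamodb": {"Keys": {"id": {"S": "c-2"}}}},
    ]
}

counts, changed_keys = summarize_stream_records(sample_event)
print(counts)
```

A real handler would typically forward each change to another system, which is how streams keep multiple data sources in sync.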

2 Reliable Methods for Migrating Postgres to DynamoDB

There are two methods for migrating data from Postgres to DynamoDB:

- Method #1: Migrating Postgres to DynamoDB Using SaaS Tools like Estuary

- Method #2: Migrating Postgres to DynamoDB Using AWS DMS

Method #1: Migrating Postgres to DynamoDB Using SaaS Tools Like Estuary

Estuary Flow is a powerful data integration platform that helps you streamline your data workflows effectively. You can use Flow to set up an automated, real-time ETL pipeline that simplifies data extraction from any source, data transformation, and data loading into any destination.

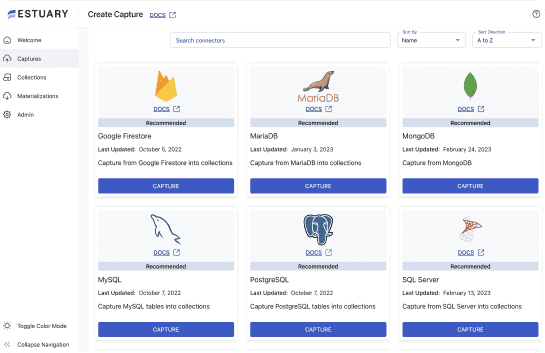

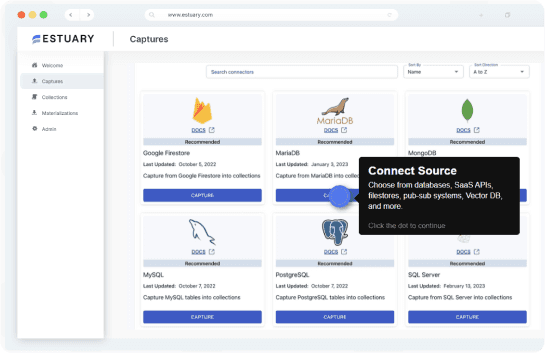

Flow offers a comprehensive array of pre-built connectors for effortless integration between different platforms. Its cloud-native architecture ensures scalability for the seamless handling of large datasets. With its no-code approach, you don’t require in-depth technical expertise to use Estuary Flow.

Here are some of the most significant features of Estuary Flow:

- Pre-built Connectors: Estuary offers 100+ pre-built connectors, including databases, cloud storage, FTP, and more, catering to diverse data sources and destinations. These connectors help streamline the data integration process for quick setup of ETL pipelines.

- Simplified Setup: Estuary's user-friendly interface simplifies setup and configuration, so deploying data migration pipelines is quick. This shortens the time-to-value for data integration projects.

- Scalability: Built on a cloud-native architecture, Estuary exhibits remarkable scalability by supporting active workloads with up to 7GB/s Change Data Capture (CDC). This makes the platform flexible enough to adapt to the dynamic needs of your organization's data landscape during migration.

- Minimal Maintenance: Estuary operates as a fully managed service, minimizing the necessity for manual maintenance. This allows you to focus more on extracting valuable data insights rather than diverting efforts toward infrastructure management.

Here's a step-by-step guide to using Estuary Flow to integrate PostgreSQL and DynamoDB:

Prerequisites:

Step 1: Set Up the PostgreSQL Data Source

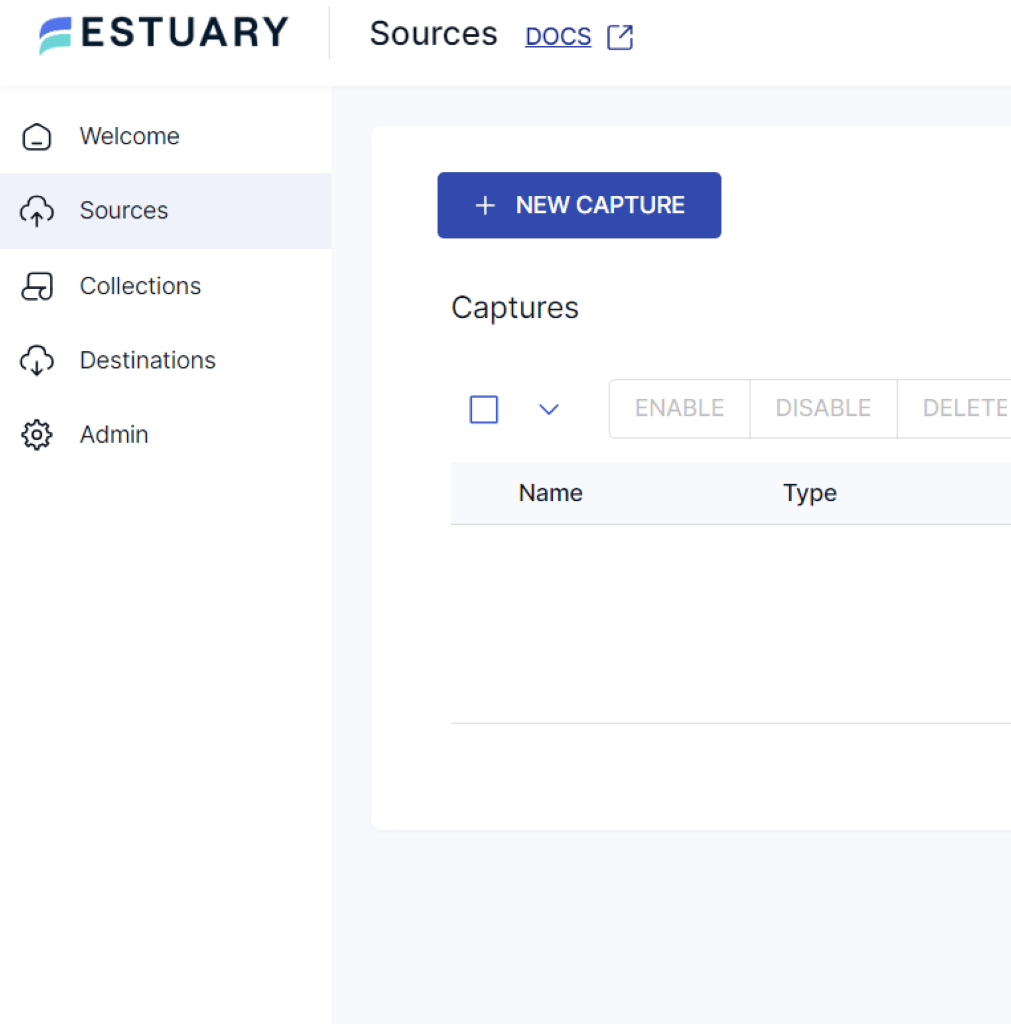

- Sign up for an Estuary Flow account and log in to the platform.

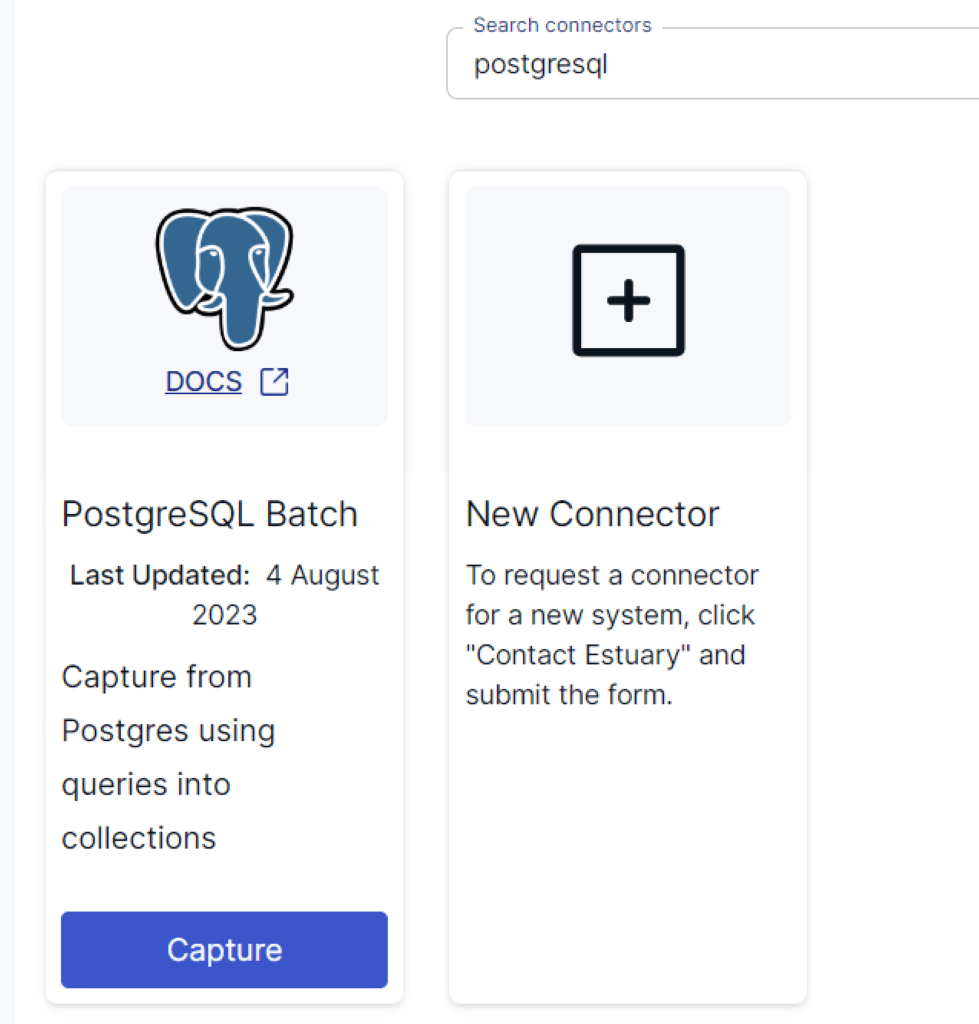

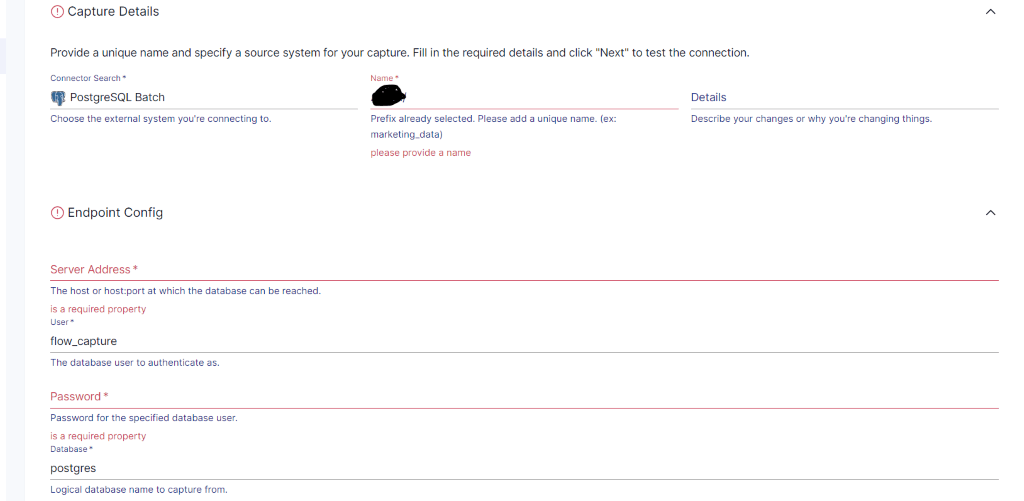

- In the Estuary Flow dashboard, navigate to the Sources section to configure the data source for your data pipeline. Click the + NEW CAPTURE button to initiate the setup process. Use the search bar to find the PostgreSQL connector. When the connector appears in the search results, click on its Capture button to proceed.

- Provide details such as the PostgreSQL Server Address, Username, Password, and Database. Click on the NEXT button to proceed.

- Now, click on SAVE AND PUBLISH to finish configuring the PostgreSQL connector.

Step 2: Set Up the DynamoDB Destination

- To configure the destination end of the pipeline, click on Destinations in the Estuary Flow dashboard.

- Next, click on the + NEW MATERIALIZATION button. Use the search box to find the DynamoDB connector on the Create Materialization page. Click on the Materialization button of the DynamoDB connector to proceed.

- Provide the necessary details for the DynamoDB connector and click on NEXT to proceed.

- If the data captured from Postgres wasn’t auto-filled, you can add the data from the Source Collections section. This allows you to select the exact collections that you want to transfer into DynamoDB.

- Now, click on SAVE AND PUBLISH to finish the DynamoDB connector setup.

This completes the PostgreSQL to DynamoDB integration. For more information, refer to the Estuary documentation for the PostgreSQL capture connector and the DynamoDB materialization connector.

Method #2: Migrating PostgreSQL to DynamoDB Using AWS Database Migration Service (DMS)

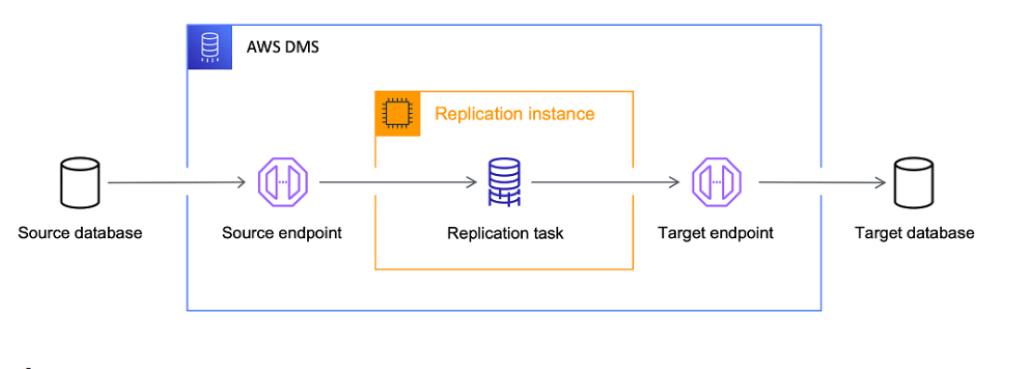

To migrate Postgres to DynamoDB using AWS DMS, first, you must configure a replication instance and create source and target endpoints. Then, design a replication task to specify data transfer paths and customize settings. Once the replication task is configured, AWS DMS will start migrating data from Postgres to DynamoDB.

Let's look into the step-by-step breakdown of the process:

Note: AWS DMS requires at least one endpoint to be on an AWS service. You can't use it to move data between two on-premises databases.

Step 1: Configuring the Replication Instance

- Log in to the AWS Management Console and navigate to the AWS Database Migration Service (DMS) console.

- Now, create a new replication instance that acts as the migration server. Set up the replication instance by configuring parameters such as the instance class, allocated storage, and network settings, and by assigning IAM roles for access permissions. The AWS DMS documentation covers replication instances in more detail.
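If you prefer to script this step instead of using the console, the sketch below assembles parameters for the `create_replication_instance` operation of the boto3 DMS client. The helper name, the identifier, the instance class, and the storage size are illustrative assumptions, not recommendations for any particular workload.

```python
def build_replication_instance_params(
    identifier,
    instance_class="dms.t3.medium",
    storage_gb=50,
    security_group_ids=None,
    subnet_group=None,
):
    """Build keyword arguments for the DMS CreateReplicationInstance call."""
    params = {
        "ReplicationInstanceIdentifier": identifier,
        "ReplicationInstanceClass": instance_class,
        "AllocatedStorage": storage_gb,  # in gigabytes
        "PubliclyAccessible": False,
        "MultiAZ": False,
    }
    # Network settings are optional; DMS falls back to defaults if omitted.
    if security_group_ids:
        params["VpcSecurityGroupIds"] = list(security_group_ids)
    if subnet_group:
        params["ReplicationSubnetGroupIdentifier"] = subnet_group
    return params


instance_params = build_replication_instance_params(
    "pg-to-dynamodb",  # hypothetical identifier
    security_group_ids=["sg-0123456789abcdef0"],  # placeholder ID
)

# With boto3 installed and AWS credentials configured:
#   import boto3
#   boto3.client("dms").create_replication_instance(**instance_params)
```

The replication instance must be able to reach the PostgreSQL server over the network, so the security group and subnet choices matter more than the defaults suggest.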

Step 2: Creating the Source Endpoint

- Once the replication instance is set up, create a source endpoint for the PostgreSQL database. This source endpoint requires connection details such as the address, port number, username, password, and database name.

- These credentials allow AWS DMS to establish a secure connection to the PostgreSQL source. The AWS DMS documentation on endpoints explains how to create them.
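The connection details listed above map onto the `create_endpoint` operation of the boto3 DMS client. The following is a minimal sketch under stated assumptions: the helper name, the host, the database name, and the user are placeholders, and reading the password from the `PGPASSWORD` environment variable is just one way to keep it out of source code.

```python
import os


def build_postgres_source_endpoint(
    identifier, host, database, username, password, port=5432, ssl_mode="require"
):
    """Build keyword arguments for a DMS source endpoint on PostgreSQL."""
    return {
        "EndpointIdentifier": identifier,
        "EndpointType": "source",
        "EngineName": "postgres",
        "ServerName": host,
        "Port": port,
        "DatabaseName": database,
        "Username": username,
        "Password": password,
        "SslMode": ssl_mode,  # one of: none, require, verify-ca, verify-full
    }


source_params = build_postgres_source_endpoint(
    identifier="pg-source",           # hypothetical identifier
    host="db.example.internal",       # placeholder host
    database="appdb",                 # placeholder database
    username="dms_user",              # placeholder user
    password=os.environ.get("PGPASSWORD", ""),
)

# With boto3 installed and AWS credentials configured:
#   import boto3
#   boto3.client("dms").create_endpoint(**source_params)
```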

Step 3: Creating the Target Endpoint

- Now, create a target endpoint for DynamoDB. Select DynamoDB as the target engine and specify the IAM service access role that AWS DMS should assume to write to DynamoDB tables in your region.
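For a scripted setup, a DynamoDB target endpoint can be described with the same `create_endpoint` operation of the boto3 DMS client, using the `DynamoDbSettings` parameter to pass the service access role. The helper name, the identifier, and the role ARN below are placeholders.

```python
def build_dynamodb_target_endpoint(identifier, service_access_role_arn):
    """Build keyword arguments for a DMS target endpoint on DynamoDB."""
    if not service_access_role_arn.startswith("arn:"):
        raise ValueError("service_access_role_arn must be an IAM role ARN")
    return {
        "EndpointIdentifier": identifier,
        "EndpointType": "target",
        "EngineName": "dynamodb",
        # DMS assumes this role to create and write to DynamoDB tables.
        "DynamoDbSettings": {"ServiceAccessRoleArn": service_access_role_arn},
    }


target_params = build_dynamodb_target_endpoint(
    "dynamodb-target",                                # hypothetical identifier
    "arn:aws:iam::123456789012:role/dms-dynamodb",    # placeholder ARN
)

# With boto3 installed and AWS credentials configured:
#   import boto3
#   boto3.client("dms").create_endpoint(**target_params)
```

No host, port, or password is needed here because access to DynamoDB is granted through the IAM role.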

Step 4: Creating a Replication Task

- Create a replication task that ties together the replication instance, the source endpoint, and the target endpoint. This tells AWS DMS which path the data should take.

- During the configuration of the replication task, you can select specific tables or schemas to migrate PostgreSQL data to DynamoDB. Additionally, you can customize settings, such as table mappings, data type conversions, and validation options, to ensure compatibility between the source and target databases.
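Table mappings are supplied to DMS as a JSON document. The sketch below builds a selection rule plus an object-mapping rule for a DynamoDB target and then assembles parameters for the `create_replication_task` operation of the boto3 DMS client. The rule layout is modeled on the format described in the AWS DMS documentation for DynamoDB targets and should be checked against it before use. The helper names, schema, table, column, and ARNs are all placeholders.

```python
import json


def build_table_mappings(schema, table, partition_key_column, target_table=None):
    """Return a DMS table-mapping JSON string for one Postgres table."""
    locator = {"schema-name": schema, "table-name": table}
    rules = [
        {   # Selection rule: which source table to migrate.
            "rule-type": "selection",
            "rule-id": "1",
            "rule-name": "include-table",
            "object-locator": locator,
            "rule-action": "include",
        },
        {   # Object-mapping rule: how rows become DynamoDB items.
            "rule-type": "object-mapping",
            "rule-id": "2",
            "rule-name": "map-to-dynamodb",
            "rule-action": "map-record-to-record",
            "object-locator": locator,
            "target-table-name": target_table or table,
            "mapping-parameters": {
                "partition-key-name": partition_key_column,
                "attribute-mappings": [
                    {
                        "target-attribute-name": partition_key_column,
                        "attribute-type": "scalar",
                        "attribute-sub-type": "string",
                        "value": "${" + partition_key_column + "}",
                    }
                ],
            },
        },
    ]
    return json.dumps({"rules": rules})


def build_task_params(task_id, source_arn, target_arn, instance_arn, mappings):
    """Build keyword arguments for the DMS CreateReplicationTask call."""
    return {
        "ReplicationTaskIdentifier": task_id,
        "SourceEndpointArn": source_arn,
        "TargetEndpointArn": target_arn,
        "ReplicationInstanceArn": instance_arn,
        "MigrationType": "full-load",  # or "cdc" / "full-load-and-cdc"
        "TableMappings": mappings,
    }


mappings = build_table_mappings("public", "customers", "customer_id")
task_params = build_task_params(
    "pg-to-dynamodb-task",
    "arn:aws:dms:us-east-1:123456789012:endpoint:SOURCE",  # placeholder
    "arn:aws:dms:us-east-1:123456789012:endpoint:TARGET",  # placeholder
    "arn:aws:dms:us-east-1:123456789012:rep:INSTANCE",     # placeholder
    mappings,
)
# boto3.client("dms").create_replication_task(**task_params)
```

The partition key named in the object-mapping rule decides how rows are keyed in DynamoDB, so rows that share a key value would overwrite one another on the target.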

Step 5: Monitoring and Validating the Migration Process

- Once the replication task is configured and started, AWS DMS begins copying data from the source PostgreSQL database to the target DynamoDB table. The migration continues until all selected data is replicated. DMS reports the load status of each table, but you should still verify the migrated data yourself.

- You can monitor the migration progress, track the number of records copied, and identify potential errors or issues through the AWS DMS console.

- After the replication task completes successfully, validate the imported data in DynamoDB to ensure accuracy and completeness. This step is crucial to confirm that the data in DynamoDB matches the data in PostgreSQL. The AWS DMS documentation describes its data validation feature and the source and target combinations it supports.
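One simple completeness check is to compare the source row count with the number of items in the target table. The sketch below counts items with a paginated `scan` call on the boto3 DynamoDB client, using `Select="COUNT"`. The function names and the row count used in the comparison are hypothetical. Matching counts do not prove that the contents are identical, and a full scan consumes read capacity on large tables.

```python
def count_dynamodb_items(client, table_name):
    """Count items in a table by scanning it page by page."""
    total = 0
    kwargs = {"TableName": table_name, "Select": "COUNT"}
    while True:
        page = client.scan(**kwargs)
        total += page["Count"]
        last_key = page.get("LastEvaluatedKey")
        if not last_key:
            return total
        # More pages remain: continue from where the last page stopped.
        kwargs["ExclusiveStartKey"] = last_key


def compare_counts(postgres_rows, dynamodb_items):
    """Summarize whether source and target counts agree."""
    return {
        "postgres": postgres_rows,
        "dynamodb": dynamodb_items,
        "match": postgres_rows == dynamodb_items,
    }


# Usage, assuming boto3 is installed and the Postgres count was obtained
# separately (for example with SELECT count(*) FROM customers):
#   import boto3
#   items = count_dynamodb_items(boto3.client("dynamodb"), "customers")
#   print(compare_counts(postgres_rows=1200, dynamodb_items=items))
```

For a deeper check, you could sample primary keys from PostgreSQL and fetch the corresponding items from DynamoDB to compare attribute values.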

The Takeaway

Migrating from PostgreSQL to DynamoDB involves transitioning from a relational database to a NoSQL database. This migration is associated with benefits, such as improved scalability, flexibility, real-time data analysis, and seamless integration with other AWS services.

To move data from Postgres to DynamoDB, you can use a SaaS tool like Estuary Flow or set up AWS DMS manually. Both are reliable options for replicating your data.

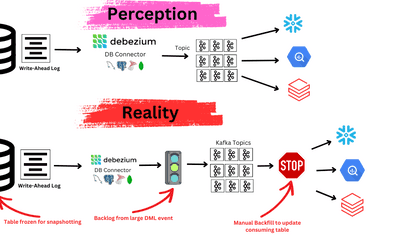

However, AWS DMS has limitations, including potential complexities in setting up and configuring the replication task and its dependency on specific AWS infrastructure. These are factors to keep in mind when building and maintaining your data pipeline.

Data integration platforms like Estuary offer a more user-friendly approach with automated, no-code integration capabilities. Estuary's pre-built connectors and no-code environment simplify integration, significantly reducing setup time and maintenance effort. Overall, Estuary's streamlined process and accessibility make it an excellent choice for PostgreSQL to DynamoDB integration.

Try Estuary Flow, a seamless, no-code data integration solution. It allows you to connect data from various sources to destinations, which helps reduce time and effort when moving your data.