Organizations today pull data from many different sources to understand their business operations and make informed decisions. Because this data is scattered across systems, it must be consolidated and processed before it can yield useful insights.

ETL (extract, transform, load) data pipelines make this simpler. They extract data from a source, transform it, and load it into a suitable destination, which improves data quality, accessibility, and workflows. Numerous reliable pipeline-building tools can help you unlock the full potential of your data.
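To make the three stages concrete, here is a minimal sketch in plain Python that uses only the standard library. The in-memory CSV, its field names, and the dictionary that stands in for a destination are all hypothetical; a real pipeline would read from an actual source and write to a system such as Elasticsearch.

```python
import csv
import io

# Hypothetical source data: a small CSV export held in memory
RAW_CSV = """order_id,amount,country
1001,19.99,us
1002,5.00,de
1003,,fr
"""


def extract(raw_csv):
    """Extract: read rows from the CSV source as dictionaries."""
    return list(csv.DictReader(io.StringIO(raw_csv)))


def transform(rows):
    """Transform: drop rows missing an amount, cast types, and normalize values."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue  # skip rows with no amount
        cleaned.append({
            "order_id": int(row["order_id"]),
            "amount": float(row["amount"]),
            "country": row["country"].upper(),
        })
    return cleaned


def load(records, destination):
    """Load: write records into the destination (a dict standing in for a data store)."""
    for record in records:
        destination[record["order_id"]] = record
    return destination


store = load(transform(extract(RAW_CSV)), {})
print(store)
```

Keeping each stage as a separate function means you can swap the source or the destination without touching the transformation logic.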

Logstash is a widely used tool for log processing and data ingestion. As part of the Elastic Stack, it is a popular choice for building ETL data pipelines. However, in the ever-evolving landscape of data analytics, organizations need tools that can be customized to their specific requirements, which is why many look for Logstash alternatives.

Elasticsearch, also part of the Elastic Stack, is a search and analytics engine that delivers near real-time insights from data, which makes it a go-to destination for data pipelines. This article explores two methods of building an ETL data pipeline for Elasticsearch.

Logstash Overview

Logstash is an open-source, server-side data processing pipeline that is part of the Elastic Stack (formerly known as the ELK Stack). It collects data in varied forms, such as logs, database records, or API responses, transforms it with filters, and then sends it to the platform of your choice, such as Elasticsearch, for storage and analysis.

Key features of Logstash include:

- Versatile Data Ingestion: Logstash supports a wide variety of input plugins, so it can ingest data from many kinds of sources, such as log files, databases, and syslog.

- Efficient Filters: Logstash includes a diverse range of built-in filters for tasks such as parsing, geolocation, anonymization, and other transformations, giving you flexible control over how your data is transformed.

- Alerting and Monitoring: Logstash can be configured to check incoming data against specific conditions and generate alerts when those conditions are met, which helps you keep track of important changes in your data.
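Logstash filters are written in Logstash's own pipeline configuration language, but the idea behind the grok filter (matching unstructured text against a pattern and extracting named fields) can be sketched in Python. The regular expression, field names, and sample log line below are illustrative assumptions; only the `_grokparsefailure` tag mirrors what Logstash adds by default when a grok pattern fails to match.

```python
import re

# Simplified, hypothetical stand-in for a grok pattern such as
# "%{IP:client} %{WORD:method} %{URIPATHPARAM:request} %{NUMBER:status}"
LOG_PATTERN = re.compile(
    r"(?P<client>\d{1,3}(?:\.\d{1,3}){3}) "
    r"(?P<method>[A-Z]+) "
    r"(?P<request>\S+) "
    r"(?P<status>\d{3})"
)


def parse_line(line):
    """Turn one unstructured log line into a structured event of named fields."""
    match = LOG_PATTERN.match(line)
    if match is None:
        # Like grok's default behavior, keep the raw message and tag the failure
        return {"message": line, "tags": ["_grokparsefailure"]}
    event = {"message": line, **match.groupdict()}
    event["status"] = int(event["status"])  # captured values are text, so convert
    return event


print(parse_line("203.0.113.7 GET /index.html 200"))
print(parse_line("not a log line"))
```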

Elasticsearch Overview

Elasticsearch is a search and analytics engine. It is an open-source tool and is part of the Elastic Stack, to which Logstash and Kibana also belong. Elasticsearch is designed to handle large volumes of data in near real time.

Key features of Elasticsearch are:

- Versatile Querying: Elasticsearch supports many types of queries, including structured, unstructured, geo, and metric queries. It is particularly efficient at full-text search, letting you search large volumes of text data.

- RESTful API: Elasticsearch exposes a RESTful (Representational State Transfer) API, so you interact with it using standard HTTP methods and JSON. Because any language or application that can send HTTP requests can use this API, Elasticsearch is easy to integrate with a wide range of programming languages and applications.

- Integration With Kibana: Kibana is an open-source data visualization platform designed to complement Elasticsearch. It lets you explore and analyze your data through dashboards, charts, and maps, and share the resulting insights.
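Because the API is plain HTTP plus JSON, any language with an HTTP library can talk to Elasticsearch. The sketch below uses only Python's standard library to build a full-text search request for the `_search` endpoint using a `match` query. The node URL, index name, and field name are assumptions, and the request is only constructed, not sent, so the example runs without a cluster.

```python
import json
import urllib.request


def build_search_request(base_url, index, field, text):
    """Build (but do not send) an HTTP request for Elasticsearch's _search endpoint.

    The JSON body uses a full-text `match` query from the Query DSL.
    """
    body = {"query": {"match": {field: text}}}
    return urllib.request.Request(
        url=f"{base_url}/{index}/_search",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical local node, index, and field names
req = build_search_request("http://localhost:9200", "articles", "title", "data pipeline")
print(req.get_method(), req.full_url)
print(req.data.decode("utf-8"))

# Against a live cluster, you could send it with:
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp))
```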

How to Use Logstash to Build ETL Pipeline for Elasticsearch

Building an ETL data pipeline in Logstash involves configuring it to ingest data from one or more sources, transform that data, and load it into an appropriate destination. Here are the steps:

Step 1: Installation

Install Logstash on your server by downloading it from its official website.

Step 2: Define the Data Source

Logstash supports a variety of data sources, such as ArcSight, Netflow, and MySQL. Configure an input for the source you want to extract your data from.

Step 3: Data Transformation

Use Logstash filters to apply the required transformations to the extracted data. Filters such as Grok, Mutate, Date, and GeoIP let you parse, modify, and enrich your data, and their flexibility lets you transform large volumes of data to suit your needs.
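Logstash expresses these filters in its pipeline configuration, but their effect can be sketched in Python. The function below imitates two common steps on an event that has already been parsed: a mutate-style rename and type conversion, and a date-style step that turns a log timestamp into a UTC `@timestamp`. The field names and the Apache-style timestamp format are assumptions for illustration, not Logstash defaults.

```python
from datetime import datetime, timezone


def mutate_and_date(event):
    """Imitate mutate-style and date-style filters on one already-parsed event.

    Field names and the timestamp format are hypothetical examples.
    """
    out = dict(event)  # work on a copy so the input event is unchanged
    # mutate (rename + convert): move "status" to "http_status" as an integer
    out["http_status"] = int(out.pop("status"))
    # date: parse the raw Apache-style timestamp, store it in @timestamp as UTC ISO 8601
    raw_time = out.pop("time")
    parsed = datetime.strptime(raw_time, "%d/%b/%Y:%H:%M:%S %z")
    out["@timestamp"] = parsed.astimezone(timezone.utc).isoformat()
    return out


print(mutate_and_date({"status": "404", "time": "10/Oct/2023:13:55:36 -0700"}))
```

Normalizing timestamps to UTC at this stage means every event sorts and filters consistently once it reaches Elasticsearch, regardless of the time zone of the original log.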

Step 4: Define Destination

Specify the destination where you want to load your transformed data. Usually, Elasticsearch is the go-to destination for Logstash.

Once the pipeline is built, you can run it, monitor it, and troubleshoot any errors in the data. The result is a centralized data store that you can analyze whenever required.

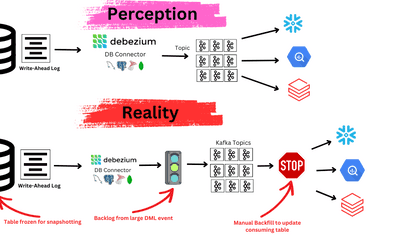

The Need for Logstash Alternatives

Logstash is a robust tool for data ingestion and processing. However, specific requirements or constraints sometimes call for other tools, which is why Logstash alternatives that offer additional features or simplify data migration have emerged.

Some of the prominent reasons to find Logstash alternatives are:

- Resource Consumption: Logstash runs on the Java Virtual Machine and can consume a large share of system resources, which becomes harder to manage as data volumes grow. This creates the need for lightweight Logstash alternatives that use fewer resources.

- Specific Needs: Some alternatives are better suited to particular requirements, such as industry-specific demand patterns, client needs, or support for a wider variety and number of data sources.

- Ease of Use: Users without a strong technical background may find Logstash's configuration complex and difficult to work with, so they may look for simpler alternatives that are easier to adopt and implement.

Easy Ways to Build an ETL Data Pipeline for Elasticsearch

There are two ways to build an ETL pipeline for Elasticsearch:

- Method 1: Using a no-code tool like Estuary Flow

- Method 2: Writing custom code for the ETL process

Method 1: Using Estuary Flow to Build ETL Data Pipeline for Elasticsearch

Estuary Flow is a tool for building ETL data pipelines that simplifies data integration. With Estuary Flow, you can extract, transform, and load data into Elasticsearch without writing complex scripts, making the data easy to search and analyze.

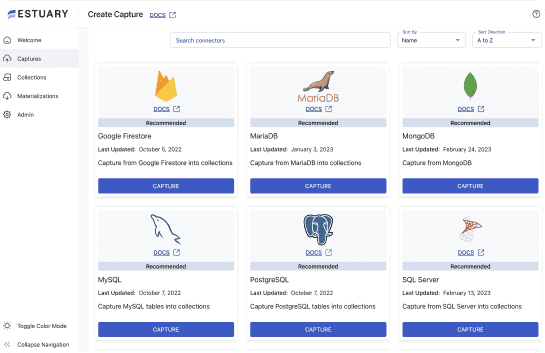

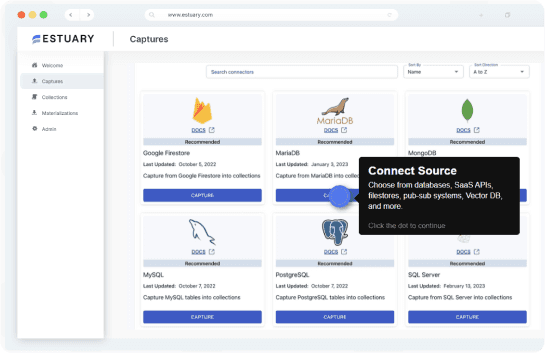

Flow offers a user-friendly interface and features such as near real-time synchronization and more than 300 built-in connectors. Follow the steps below to build your ETL data pipeline for Elasticsearch using Estuary Flow:

Prerequisites

Step 1: Establishing a Source

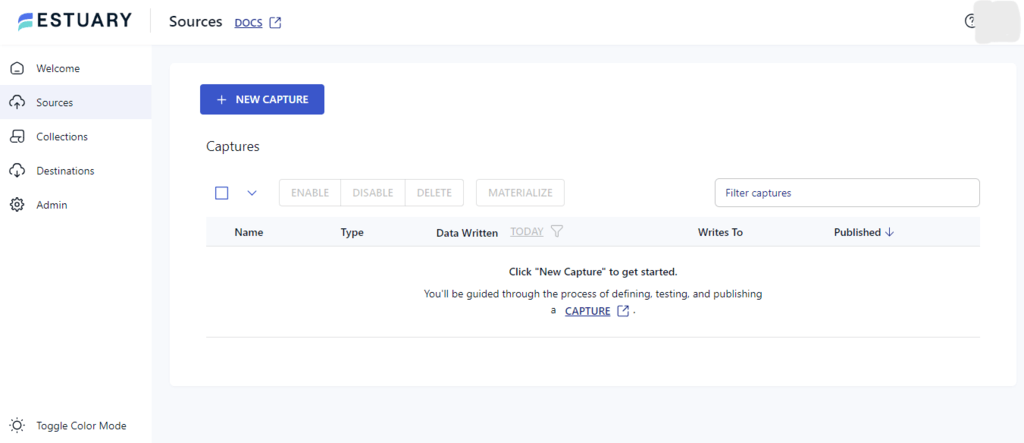

- On the main dashboard of Estuary Flow, click Sources on the left.

- On the Sources page, click on the +NEW CAPTURE button on the top left of the page.

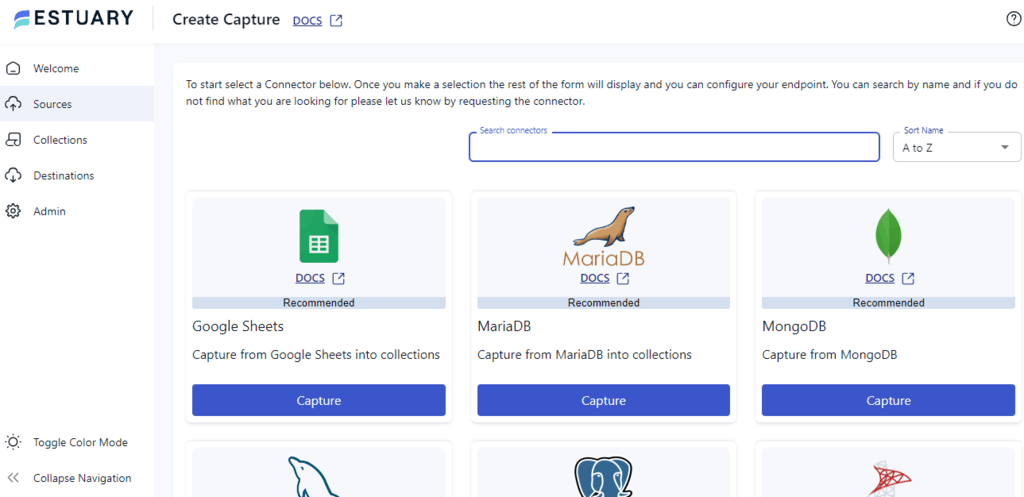

- Estuary Flow offers a large library of sources. Type the name of the source that you wish to connect to in the Search Connectors bar and click on the CAPTURE button below it.

Step 2: Connect Elasticsearch as Destination

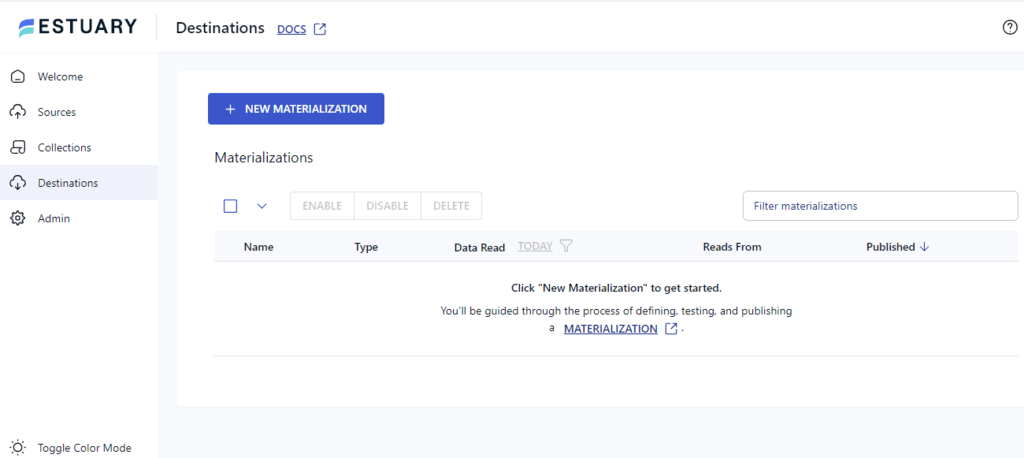

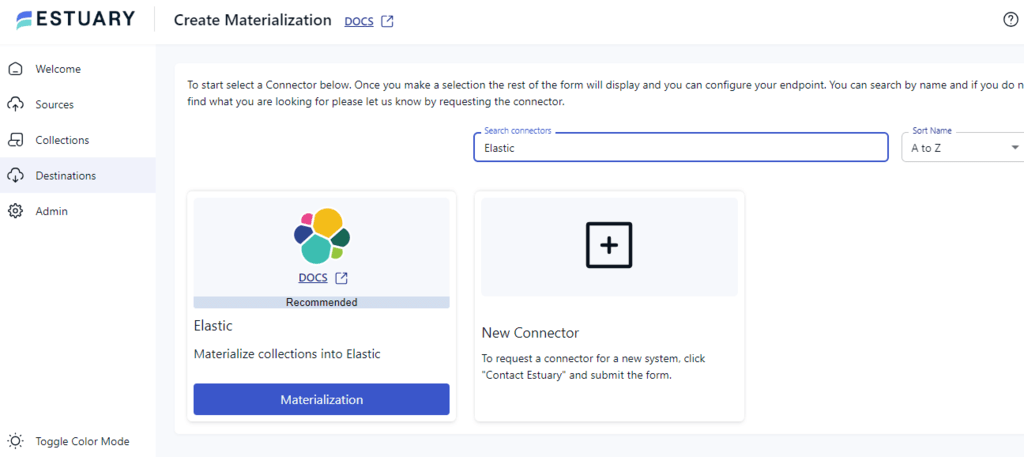

- On the dashboard of Estuary Flow, click on Destinations on the left side. Click on the +NEW MATERIALIZATION button on the top left.

- In the Search Connectors box, type Elastic and click on the Materialization button below Elastic.

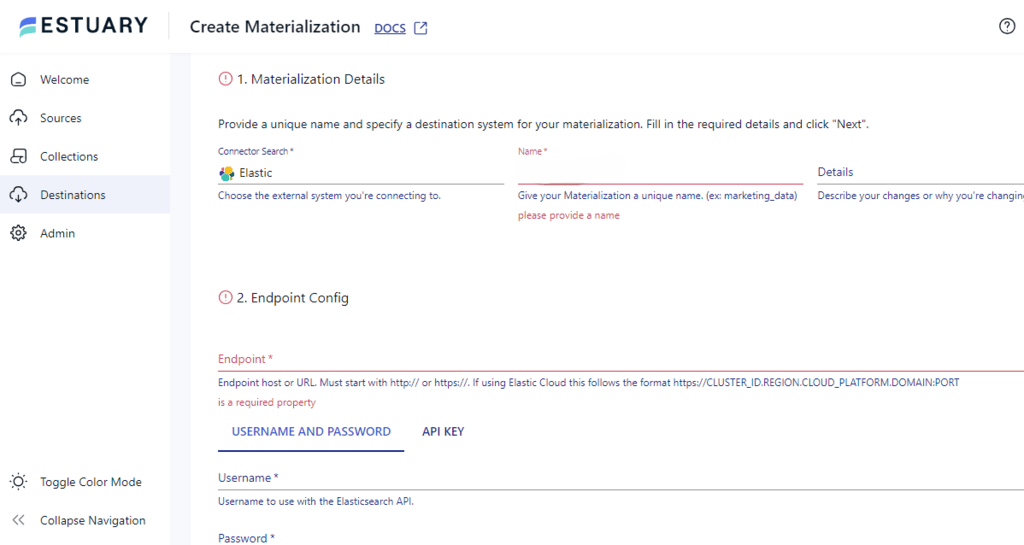

- On the Create Materialization page, enter the connection details, such as Name, Endpoint_URL, Username, and Password.

Advantages of Using Estuary Flow

- Code-Free Configuration: You can build an ETL data pipeline in Estuary Flow without any coding knowledge, using a user-friendly interface that is accessible to everyone.

- Reusability and Modularity: It enables reusability and modularity of tasks. You can encapsulate specific ETL logic into reusable tasks, making it easier to maintain, update, and share components across different workflows.

- Scheduling and Automation: Flow lets you schedule workflows so that the platform runs them at specific time intervals. Its automation features also help you set up recurring ETL processes so that data is processed and updated regularly.

- Built-in Connectors: Flow provides a wide range of built-in connectors that act as source and destination for the data integration process.

Method 2: Using Manual Method to Build ETL Data Pipeline for Elasticsearch Through Custom Code

This method builds an ETL data pipeline for Elasticsearch with custom Python code: it extracts data from a CSV source file, transforms it, and loads it into Elasticsearch.

Step 1: Install the Required Libraries

First, make sure Python is installed. Then run the command below to install the `elasticsearch` client library, which lets Python interact with Elasticsearch, along with `pandas`, which the later steps use to read the CSV file.

```shell
pip install elasticsearch pandas
```

Step 2: Import Libraries

Next, import pandas for reading the CSV file and the Elasticsearch client for loading the data.

```python
import pandas as pd
from elasticsearch import Elasticsearch
```

Step 3: Extract Data From CSV

Use pandas' `read_csv` function to read the CSV source file into a DataFrame.

```python
df = pd.read_csv("data.csv")
```

Step 4: Transform the Data

Before loading your dataset into Elasticsearch, apply whatever transformations are needed to standardize, modify, or enrich the data.
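What the transformation looks like depends on your data. As one hedged example, the function below cleans rows in the list-of-dictionaries shape that `df.to_dict(orient="records")` returns: it normalizes column names, trims string values, and replaces NaN (which pandas uses for missing values but which is not valid JSON) with `None`. The cleaning rules and sample rows are assumptions, not requirements.

```python
import math


def transform_records(records):
    """Standardize a list of row dictionaries before indexing.

    - trims and lowercases column names
    - strips surrounding whitespace from string values
    - replaces NaN with None, which serializes to JSON null
    """
    cleaned = []
    for record in records:
        row = {}
        for key, value in record.items():
            if isinstance(value, float) and math.isnan(value):
                value = None
            elif isinstance(value, str):
                value = value.strip()
            row[key.strip().lower()] = value
        cleaned.append(row)
    return cleaned


# Hypothetical rows, shaped like the output of df.to_dict(orient="records")
sample = [{" Name ": "  Alice ", "Age": 30.0}, {" Name ": "Bob", "Age": float("nan")}]
print(transform_records(sample))
```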

Step 5: Migrating Data into Elasticsearch

The final step is to load the data into Elasticsearch. Define a function that connects to your Elasticsearch instance and indexes each row of the transformed DataFrame as a document.

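```python
def load_data_to_elasticsearch(df, index_name):
    # Connect to a local node (version 8 of the client requires the scheme, e.g. http://)
    es = Elasticsearch("http://localhost:9200")

    # Index each DataFrame row as a separate document
    for _, row in df.iterrows():
        es.index(index=index_name, document=row.to_dict())
```

Disadvantages of using custom code: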

- Time-Consuming: Custom code has to be written, tested, and debugged, all of which takes a significant amount of time.

- Skill Dependency: Custom code requires an organization to hire and retain a team of skilled developers.

- Challenges in Scalability: With large volumes of data, it becomes difficult to maintain and optimize the code while keeping it error-free, so scaling a custom-code pipeline to large datasets is a challenge.
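Part of the scalability problem lies in how the loading step is written. The `load_data_to_elasticsearch` function above sends one request per row, which becomes slow for large files; Elasticsearch's Bulk API instead accepts many documents in a single request. The sketch below builds the newline-delimited JSON payload that the `_bulk` endpoint expects, using only the standard library. The index name and documents are hypothetical.

```python
import json


def build_bulk_body(index_name, records):
    """Build a newline-delimited JSON (NDJSON) payload for Elasticsearch's _bulk endpoint.

    Every document contributes two lines: an action line naming the target
    index, then the document itself. The Bulk API requires a trailing newline.
    """
    lines = []
    for record in records:
        lines.append(json.dumps({"index": {"_index": index_name}}))
        lines.append(json.dumps(record))
    return "\n".join(lines) + "\n"


# Hypothetical index name and documents
payload = build_bulk_body("csv_data", [{"name": "Alice"}, {"name": "Bob"}])
print(payload)

# To send it, POST the payload to http://localhost:9200/_bulk
# with the header "Content-Type: application/x-ndjson".
```

For a large CSV, you would split the records into batches and send one `_bulk` request per batch. The official Python client's `elasticsearch.helpers.bulk` function can handle that batching for you when given actions such as `{"_index": "csv_data", "_source": record}`.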

Conclusion

In this article, you learned two ways to create an ETL data pipeline for Elasticsearch. The manual option uses custom code to extract data from a CSV file, transform it, and load it.

Alternatively, you can use a no-code, real-time ETL tool such as Estuary Flow. Besides being user-friendly, it saves time when building pipelines by offering an extensive library of connectors for configuring the source and the destination.

Ultimately, you can use a wide variety of tools and filters to transform your data before loading it into Elasticsearch, where it can then be retrieved, visualized, and analyzed for better decision-making.

Integrate various data sources and destinations in minutes. Sign up for Estuary Flow to build robust data pipelines without the hassle.

FAQs

Is Elasticsearch an ETL tool?

No, Elasticsearch is not an ETL tool. It is an open-source search and analytics engine used for storing, searching, and analyzing large volumes of data.

What is Logstash used for?

Logstash, a server-side data processing pipeline, is often used to feed data into Elasticsearch. It ingests data from multiple sources, transforms it, and then sends it to the desired destination.

What is the difference between Logstash and Elasticsearch?

The key difference between Logstash and Elasticsearch is their function. Logstash is a data processing pipeline tool, while Elasticsearch is a search and analytics engine with data storage, search, and indexing capabilities.