Data pipelines have become the backbone of modern enterprises. They ensure a smooth transfer of data across applications, databases, and other data systems, guaranteeing real-time, secure, and reliable information exchange. When it comes to designing one, the Kafka data pipeline takes center stage.

That said, understanding Kafka can be quite challenging. Besides the intricate architecture, it also involves learning about its operational principles, configuration specifics, and the potential pitfalls in its deployment and maintenance.

In today’s value-packed guide, we’ll discuss the benefits of the Kafka data pipeline and unveil its architecture and inner workings. We'll also look at the components that make up a Kafka data pipeline and provide you with real-world examples and use cases that demonstrate its immense power and versatility.

By the time you complete this article, you will have a solid understanding of the Kafka data pipeline, its architecture, and how it can solve complex data processing challenges.

What Is A Kafka Data Pipeline?

A Kafka data pipeline is a system that uses Apache Kafka, often together with Kafka Connect, to stream and process data across different applications, data systems, and data warehouses.

It acts as a central hub for data flow and allows organizations to ingest, transform, and deliver data in real time.

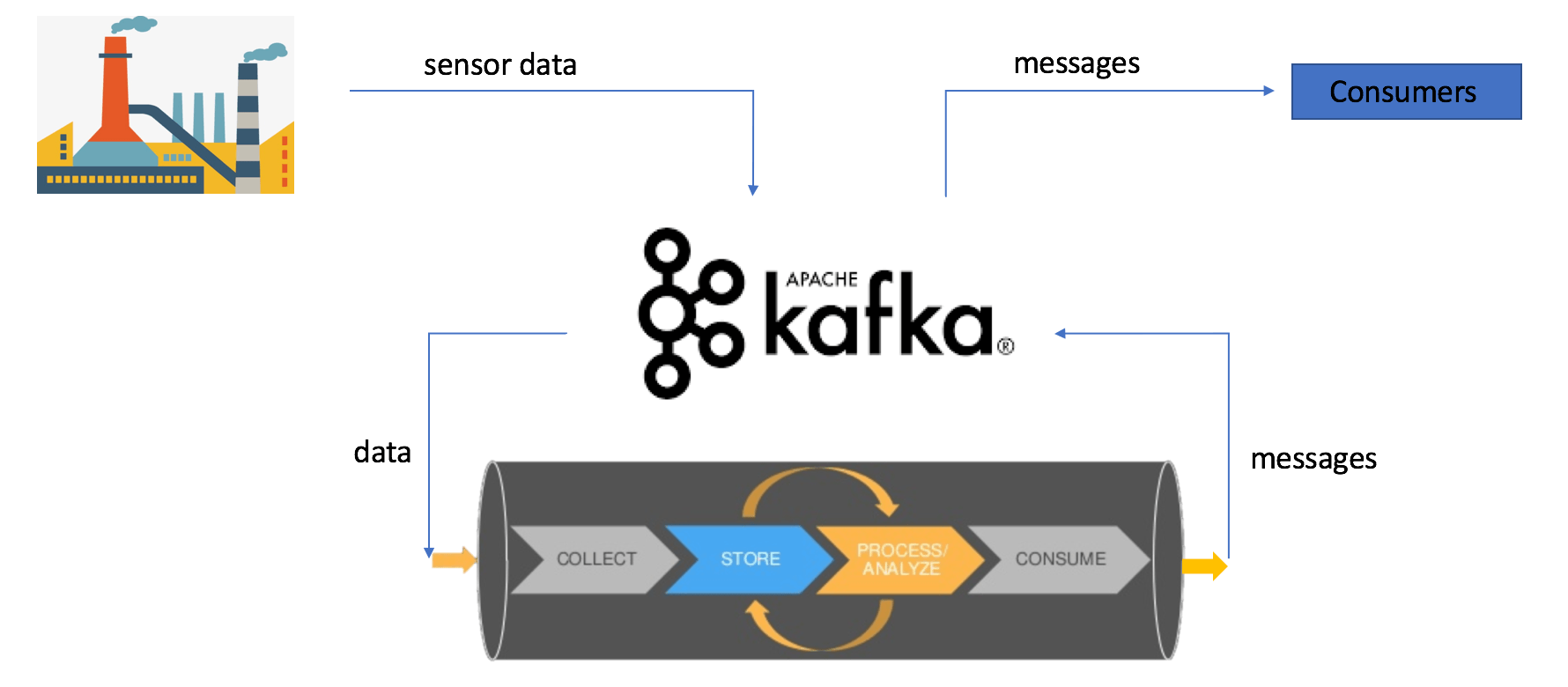

Within a Kafka data pipeline, a series of events takes place:

- Data from various sources is collected and sent to Kafka for storage and organization.

- Kafka acts as a high-throughput, fault-tolerant message broker, handling the distribution of data across topics or streams.

- Data is consumed by different applications or systems for processing and analysis.

With a Kafka data pipeline, you can use streaming data for real-time analytics, fraud detection, and log processing, unlocking valuable insights and driving informed decision-making.

Revolutionizing Data Processing: 9 Key Benefits Of Kafka Data Pipelines

Here are 9 major benefits of a Kafka data pipeline:

Real-time Data Streaming

A Kafka data pipeline ingests, processes, and delivers streaming data. It acts as a central nervous system for your data and handles data as it flows in real time.

Scalability & Fault Tolerance

Kafka's distributed architecture provides exceptional scalability and fault tolerance. By using a cluster of nodes, Kafka handles large data volumes and accommodates increasing workloads effortlessly.

Data Integration

Kafka acts as a bridge between various data sources and data consumers. It seamlessly integrates across different systems and applications and collects data from diverse sources such as databases, sensors, social media feeds, and more. It also integrates with various data processing solutions like Apache Hadoop, and cloud-based data warehouses like Amazon Redshift and Google BigQuery.

High Throughput & Low Latency

Kafka data pipelines are designed to handle thousands of messages per second with minimal latency, allowing for near real-time data processing. This makes Kafka perfect for applications that need near-instantaneous data delivery like real-time reporting and monitoring systems.

Durability & Persistence

Kafka stores data in a fault-tolerant and distributed manner so that no data is lost during transit or processing. With Kafka's durable storage mechanism, you can replay a data stream at any point in time, facilitating data recovery, debugging, and reprocessing scenarios.

Data Transformation & Enrichment

With Kafka's integration with popular stream processing frameworks like Apache Kafka Streams and KSQL, you can apply powerful transformations to your data streams in real time. This way, you can cleanse, aggregate, enrich, and filter your data as it flows through the pipeline.
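To make the cleanse, enrich, and aggregate steps concrete, here is a minimal sketch in plain Python. It does not use Kafka Streams or KSQL; the event fields (`customer`, `amount`), the `regions` lookup table, and the function names are all hypothetical and chosen only for illustration.

```python
def cleanse(events):
    """Drop events that are missing an amount."""
    return (e for e in events if e.get("amount") is not None)


def enrich(events, regions):
    """Add a region field looked up from the customer id."""
    for e in events:
        yield {**e, "region": regions.get(e["customer"], "unknown")}


def total_by_region(events):
    """Aggregate amounts per region."""
    totals = {}
    for e in events:
        totals[e["region"]] = totals.get(e["region"], 0) + e["amount"]
    return totals


# Hypothetical sample stream and lookup table.
events = [
    {"customer": "c1", "amount": 20},
    {"customer": "c2", "amount": None},
    {"customer": "c3", "amount": 5},
    {"customer": "c1", "amount": 10},
]
regions = {"c1": "EU", "c2": "US"}

# Each stage consumes the previous one lazily, one event at a time.
totals = total_by_region(enrich(cleanse(events), regions))
```

In a real pipeline each stage would read from one topic and write to another, but the shape of the logic is the same.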

Decoupling Of Data Producers & Consumers

Data producers can push data to Kafka without worrying about the specific consumers and their requirements. Similarly, consumers can subscribe to relevant topics in Kafka and consume the data at their own pace.

Exactly-Once Data Processing

Kafka supports exactly-once processing semantics when idempotent producers and transactions are enabled. With these features, each message in the data stream takes effect exactly once, even if a producer retries a send, which addresses duplicate and missing data concerns.

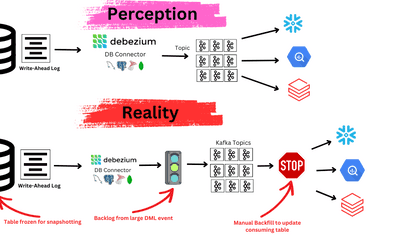
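One building block behind exactly-once delivery is deduplicating retried sends by producer ID and sequence number. The sketch below is a toy model of that idea, not Kafka's implementation; the `DedupingLog` class and its fields are hypothetical.

```python
class DedupingLog:
    """Toy log that drops retried sends using (producer_id, sequence) pairs."""

    def __init__(self):
        self.records = []
        self.last_seq = {}  # producer_id -> highest sequence number accepted

    def append(self, producer_id, seq, value):
        """Accept a record only if its sequence number is new for this producer."""
        if seq <= self.last_seq.get(producer_id, -1):
            return False  # duplicate of an earlier send, ignore it
        self.last_seq[producer_id] = seq
        self.records.append(value)
        return True


log = DedupingLog()
results = [
    log.append("p1", 0, "debit $10"),
    log.append("p1", 1, "debit $5"),
    log.append("p1", 1, "debit $5"),  # retry after a lost acknowledgment
]
```

The retried record is rejected, so the log holds each debit once even though the producer sent one of them twice.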

Change Data Capture

Kafka Connect provides connectors that capture data changes from various sources such as databases, message queues, and file systems. These connectors continuously monitor the data sources for any changes and capture them in real time. Once the changes are captured, they are transformed into Kafka messages, ready to be consumed and processed by downstream systems.

Now that we know what a Kafka data pipeline is and what its benefits are, let's look at its architecture to understand how it actually works.

Exploring The Dynamic Architecture Of Kafka Data Pipelines

Let’s first understand how Kafka works before getting into the details of the architecture.

Kafka works by receiving and transmitting data as events, also known as messages. These messages are organized into topics: data producers publish to topics, and data consumers subscribe to them. This structure is often referred to as a pub/sub system because of its core principle of 'publishing' and 'subscribing'.

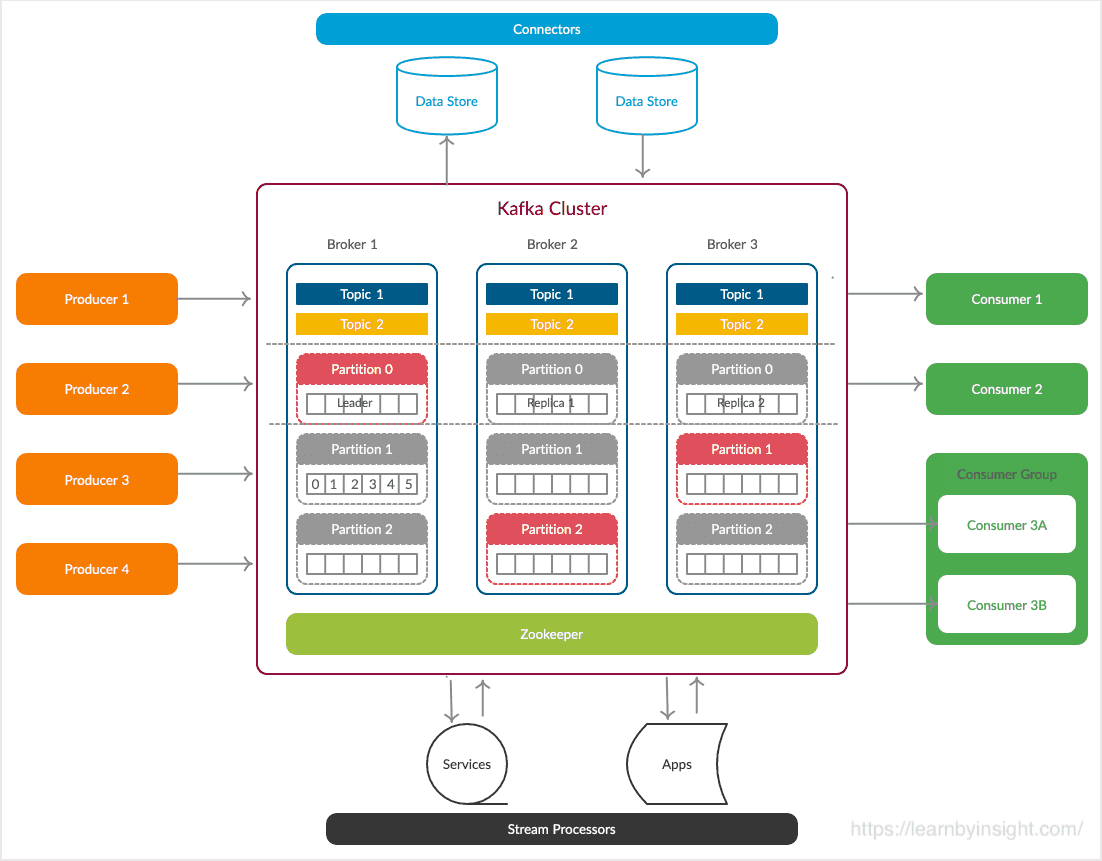

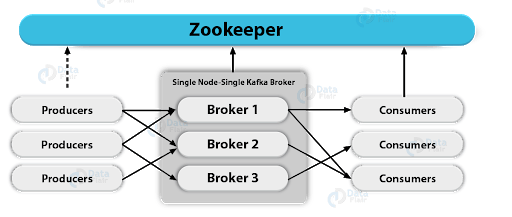
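The publish/subscribe flow described above can be sketched with a small in-memory model. This is a toy stand-in, not a Kafka client; `ToyBroker`, its methods, and the topic names are hypothetical.

```python
from collections import defaultdict


class ToyBroker:
    """In-memory stand-in for a broker: one append-only list per topic."""

    def __init__(self):
        self.topics = defaultdict(list)

    def publish(self, topic, message):
        """Append a message to a topic and return its position (offset)."""
        self.topics[topic].append(message)
        return len(self.topics[topic]) - 1

    def read(self, topic, offset):
        """Return all messages in a topic from the given offset onward."""
        return self.topics[topic][offset:]


broker = ToyBroker()
broker.publish("orders", {"id": 1, "total": 40})
broker.publish("orders", {"id": 2, "total": 15})
broker.publish("payments", {"order_id": 1})

# Two independent subscribers read the same topic without affecting each other.
billing_view = broker.read("orders", 0)
analytics_view = broker.read("orders", 1)
```

Reading does not remove messages, which is what lets several consumers work from the same topic at their own pace.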

What Is A Kafka Cluster?

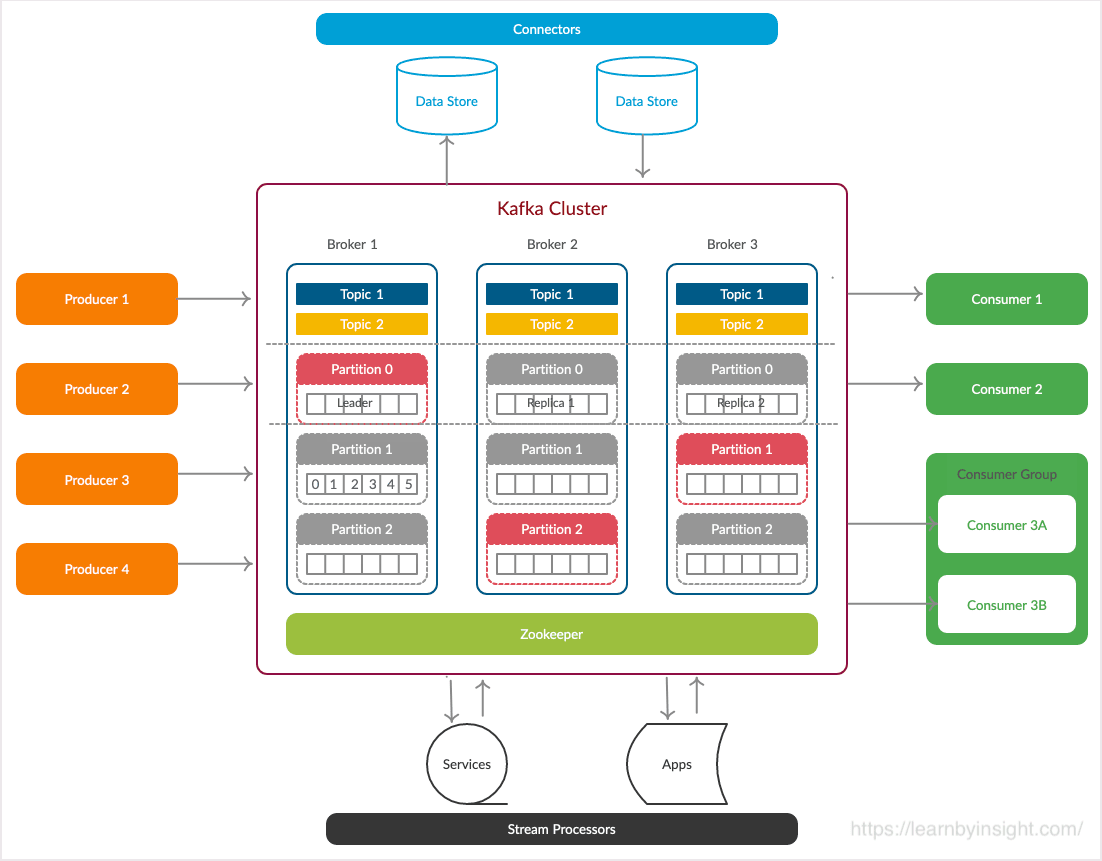

A Kafka cluster is formed by multiple brokers working together. Brokers can be added to scale the cluster without downtime. The cluster also manages the replication of data across brokers. If a broker fails for any reason, other brokers that hold replicas of its data step in and continue serving requests.

Now let's dissect the Kafka Cluster. A Kafka cluster typically comprises 5 core components:

- Kafka Topics and Partitions

- Producers

- Kafka Brokers

- Consumers

- ZooKeeper

Let's discuss these components individually.

Kafka Topic & Partition

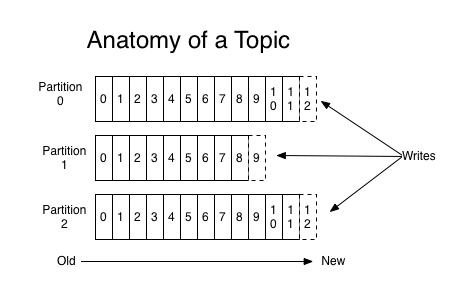

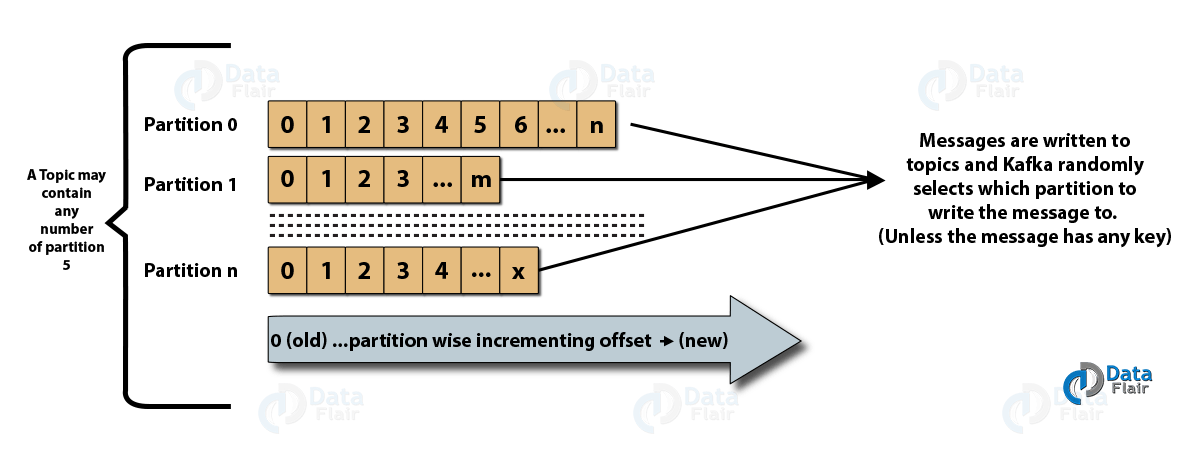

A Kafka topic is a collection of messages grouped under a specific category or feed name. It serves as a way to organize all the records in Kafka. Consumer applications read data from topics while producer applications write data to them.

Topics are divided into partitions so that multiple consumers can read data in parallel. Records within a partition are strictly ordered, and the number of partitions can be increased as needed. The partitions are distributed across the brokers in the cluster, with each broker handling requests for the partitions it hosts.

Messages with the same key are sent to the same partition, which preserves per-key ordering while letting different consumers read from the same topic concurrently.
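Key-based partitioning comes down to hashing the key and taking the result modulo the partition count. Here is a minimal sketch; it assumes CRC32 as a stand-in hash (Kafka's default partitioner uses murmur2), and the function name and sample events are hypothetical.

```python
import zlib


def choose_partition(key: str, num_partitions: int) -> int:
    """Map a key to a partition index with a stable hash.

    Assumption: CRC32 stands in for Kafka's murmur2-based default.
    """
    return zlib.crc32(key.encode("utf-8")) % num_partitions


partitions = {p: [] for p in range(3)}
events = [
    ("user-1", "login"),
    ("user-2", "login"),
    ("user-1", "click"),
    ("user-1", "logout"),
]
for key, value in events:
    partitions[choose_partition(key, 3)].append((key, value))

# Every event for user-1 lands in one partition, in the order it was sent.
user1_partition = choose_partition("user-1", 3)
user1_events = [v for k, v in partitions[user1_partition] if k == "user-1"]
```

Because the mapping depends on the partition count, changing the number of partitions changes where a given key lands from then on.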

Producers

In Kafka clusters, producers send (publish) messages to topics, which lets applications hand large volumes of data to Kafka for storage. When a producer sends a record to a topic, the record is delivered to the leader of the target partition.

The leader appends the record to its commit log and increments the record offset. This allows Kafka to keep track of the data accumulated in the cluster. Whether a producer waits for an acknowledgment depends on its `acks` setting: with `acks=0` it does not wait at all, with `acks=1` it waits for the leader, and with `acks=all` it waits until all in-sync replicas have the record.

Before sending any records, a producer fetches metadata about the Kafka cluster from a broker. This metadata identifies the leader of each partition, and producers always write to the partition leader.
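The append-and-assign-offset behavior can be illustrated with a toy append-only log. This is a sketch of the idea only, not Kafka's on-disk format; `PartitionLog` and its methods are hypothetical names.

```python
class PartitionLog:
    """Toy append-only log for a single partition."""

    def __init__(self):
        self.records = []

    def append(self, value):
        """Store the record and return the offset it was assigned."""
        offset = len(self.records)
        self.records.append(value)
        return offset

    def read_from(self, offset):
        """Return (offset, value) pairs starting at the given offset."""
        return list(enumerate(self.records[offset:], start=offset))


log = PartitionLog()
offsets = [log.append(v) for v in ["a", "b", "c"]]

# Records are never modified in place, so any earlier offset can be replayed.
replayed = log.read_from(1)
```

Offsets only ever grow, which is why a stored offset is enough to identify a position in the partition.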

Kafka Brokers

A broker in Kafka is essentially a server that receives messages from producers, assigns them unique offsets, and stores them on disk. Offsets are crucial for maintaining data consistency and allow consumers to return to the last-consumed message after a failure.
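To see how offsets let a consumer pick up where it left off, consider this small sketch. It models the partition as a plain list and the committed offset as an integer; the `consume` helper is hypothetical and not part of any Kafka client.

```python
def consume(log, committed_offset, batch_size):
    """Read up to batch_size records starting at the committed offset.

    Returns the records and the new offset to commit.
    """
    batch = log[committed_offset:committed_offset + batch_size]
    return batch, committed_offset + len(batch)


log = ["r0", "r1", "r2", "r3", "r4"]

# First run: process two records, commit the offset, then "crash".
first_batch, committed = consume(log, 0, 2)

# After a restart, the consumer resumes from the committed offset, not from zero.
second_batch, committed = consume(log, committed, 10)
```

If the consumer crashed before committing, it would re-read the same batch, which is why commit timing matters for delivery guarantees.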

Brokers respond to fetch requests from consumers and serve committed messages. A Kafka cluster consists of multiple brokers to distribute the load and ensure availability. Brokers store the partition data themselves, while cluster-wide state such as broker membership and partition leadership is maintained through ZooKeeper.

During the setup of a Kafka system, it's best to plan for topic replication. Replication ensures that if a broker goes down, a replica of the topic's data on another broker can take over. Topics can have a replication factor of 2 or more, with the additional copies stored on separate brokers.
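Here is a rough sketch of how replication lets another broker take over. It is a simplification under stated assumptions: replicas are placed round-robin and the first live replica becomes leader, whereas real Kafka elects leaders from the in-sync replica set. The function and broker names are hypothetical.

```python
def assign_replicas(partition, brokers, replication_factor):
    """Pick replication_factor brokers for a partition, round-robin from its index.

    The first broker in the returned list is treated as the preferred leader.
    """
    n = len(brokers)
    return [brokers[(partition + i) % n] for i in range(replication_factor)]


def current_leader(replicas, live_brokers):
    """Return the first replica that is still alive, or None if all are down."""
    for broker in replicas:
        if broker in live_brokers:
            return broker
    return None


brokers = ["broker-0", "broker-1", "broker-2"]
replicas = assign_replicas(partition=0, brokers=brokers, replication_factor=2)

leader_before = current_leader(replicas, live_brokers=set(brokers))
# broker-0 goes down; the surviving replica takes over.
leader_after = current_leader(replicas, live_brokers={"broker-1", "broker-2"})
```

With a replication factor of 1 there would be no second replica, and losing the broker would leave the partition without a leader.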

Consumers

In a Kafka data pipeline, consumers are responsible for reading and consuming messages from Kafka clusters. They can choose the starting offset for reading messages, allowing them to join Kafka clusters at any time.

There are 2 types of consumers in Kafka:

Low-Level Consumer

- Specifies topics, partitions, and the desired offset to read from.

- The offset can be fixed or variable to provide fine-grained control over message consumption.

High-Level Consumer (Consumer Groups)

- Consists of one or more consumers working together.

- Consumers in a group collaborate to consume messages from Kafka topics efficiently.

These different consumer categories provide flexibility and efficiency when consuming messages from Kafka clusters, catering to various use cases in data pipelines.
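Within a consumer group, each partition is read by exactly one member, so adding members spreads the work. The sketch below uses a simple round-robin split to show the idea; Kafka offers several assignment strategies, and the function and consumer names here are hypothetical.

```python
def assign_partitions(partitions, consumers):
    """Spread partitions across group members so each partition has one owner."""
    assignment = {c: [] for c in consumers}
    for i, partition in enumerate(partitions):
        owner = consumers[i % len(consumers)]
        assignment[owner].append(partition)
    return assignment


# Four partitions shared by two members, then by three after one joins.
two_members = assign_partitions([0, 1, 2, 3], ["c1", "c2"])
three_members = assign_partitions([0, 1, 2, 3], ["c1", "c2", "c3"])
```

Once a group has as many members as the topic has partitions, any additional member receives nothing to read.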

ZooKeeper

ZooKeeper plays a vital role in managing and maintaining Kafka clusters within a data pipeline. It acts as a coordination service that stores information about brokers, topics, and partitions. Newer Kafka versions can run in KRaft mode, which moves this metadata management into Kafka itself, but the responsibilities described here stay the same.

Another key function of ZooKeeper is to identify crashed brokers and newly added ones. This information helps maintain the cluster's stability and availability. Brokers pass the resulting cluster state on to producers and consumers so that clients coordinate only with active brokers.

It also tracks the leader for each partition. This information reaches producers and consumers through the brokers, enabling them to read and write messages effectively. By acting as a central coordinator, ZooKeeper increases the reliability of Kafka data pipelines.

Driving Data Innovation: 5 Inspiring Kafka Data Pipeline Applications

Here are some examples that showcase the versatility and potential of Kafka in action.

Smart Grid-Energy Production & Distribution

A Smart Grid is an advanced electricity network that efficiently integrates the behavior and actions of all stakeholders. Technical challenges of load adjustment, pricing, and peak leveling in smart grids can’t be handled by traditional methods.

One of the key challenges in managing a smart grid is handling the massive influx of streaming data from millions of devices and sensors spread across the grid. A smart grid needs a cloud-native infrastructure that is flexible, scalable, elastic, and reliable. Real-time data integration and processing are also needed in these smart setups.

That's why an increasing number of energy companies are turning to event streaming with the Kafka data pipeline and its ecosystem. With Kafka, we have a high-throughput, fault-tolerant, and scalable platform that acts as a central nervous system for the smart grid.

Whether it's managing the massive influx of data, enabling real-time decision-making, or empowering advanced analytics, Kafka data pipelines make smart grids truly smart.

Healthcare

Kafka is playing an important role in enabling real-time data processing and automation across the healthcare value chain. Kafka's ability to offer a decoupled and scalable infrastructure improves the functionality of healthcare systems. It allows unimpeded integration of diverse systems and data formats.

Let's explore how Kafka is used in healthcare:

- Use Cases in Healthcare: Kafka finds applications for pharmaceuticals, health cybersecurity, patient care, and insurance.

- Real-time Data Processing: Kafka's real-time data processing revolutionizes healthcare by improving efficiency and reducing risks.

- Real-World Deployments: Many healthcare companies are already using Kafka for data streaming. Examples include Optum/UnitedHealth Group, Centene, Bayer, Cerner, Recursion, and Care.com, among others.

Fraud Detection

Kafka plays a central role in many modern anti-fraud management systems. By employing real-time data processing, Kafka helps detect and respond to fraudulent activities promptly, avoiding revenue loss and enhancing customer experience.

Traditional approaches that rely on batch processing and analyzing data at rest are often too slow for fraud prevention. Kafka's data pipeline capabilities, including Kafka Streams, KSQL, and integration with machine learning frameworks, allow you to analyze data in motion and identify anomalies and patterns indicative of fraud in real time.
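As a simple illustration of analyzing data in motion, the sketch below flags a card that makes too many transactions inside a sliding time window. It is a toy rule in plain Python, not a production fraud model or a Kafka Streams job; the function name, thresholds, and sample data are hypothetical.

```python
from collections import defaultdict, deque


def flag_bursts(transactions, max_count, window_seconds):
    """Return (timestamp, card) pairs where a card exceeds max_count
    transactions within the trailing window."""
    recent = defaultdict(deque)  # card -> timestamps still inside the window
    flagged = []
    for ts, card in transactions:
        window = recent[card]
        window.append(ts)
        # Evict timestamps that have fallen out of the window.
        while ts - window[0] > window_seconds:
            window.popleft()
        if len(window) > max_count:
            flagged.append((ts, card))
    return flagged


# Hypothetical stream of (timestamp in seconds, card id), ordered by time.
transactions = [(0, "A"), (10, "A"), (15, "B"), (20, "A"), (25, "A"), (200, "A")]
flagged = flag_bursts(transactions, max_count=3, window_seconds=60)
```

Each transaction is evaluated as it arrives, so the fourth rapid transaction on card A is flagged immediately rather than in a later batch run.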

Here we have some real-world deployments in different industries:

- PayPal uses Kafka on transactional data for fraud detection.

- ING Bank has implemented real-time fraud detection using Kafka, Flink, and embedded analytic models.

- Kakao Games, a South Korean gaming company, employs a data streaming pipeline and Kafka platform to detect and manage anomalies across 300+ patterns.

- Capital One leverages stream processing to prevent an average of $150 of fraud per customer annually by detecting and preventing personally identifiable information violations in real-time data streams.

Supply Chain Management

Supply Chain Management (SCM) optimizes the flow of goods and services across many interconnected processes. Apache Kafka offers practical solutions to the challenges that modern supply chains face.

Kafka provides global scalability, a cloud-native architecture, data integration capabilities, and real-time analytics. By using these features, organizations can overcome challenges such as:

- Rapid change

- Lack of visibility

- Outdated models

- Shorter time frames

- Integration of diverse tech

Let's see some real-world cases of Kafka data pipeline solutions in the supply chain.

- BMW has developed a robust Kafka-based NLP service framework that enables smart information extraction.

- Walmart has successfully implemented a real-time inventory system by using Kafka data pipeline services.

- Porsche is also using Kafka for data streams for warranty and sales, manufacturing and supply chain, connected vehicles, and charging stations.

Modern Automotive Industry & Challenges

The modern automotive industry is all about agility, innovation, and pushing the boundaries of what's possible. Hundreds of robots generate an enormous amount of data at every step of the manufacturing process. Kafka's data pipelines provide the fuel that powers the advancements.

Kafka's lightning-fast data pipelines enable manufacturers to monitor and optimize their production processes like never before. Real-time data from sensors, machines, and systems are streamed into Kafka, providing invaluable insights into manufacturing operations. With Kafka, manufacturers can detect anomalies and bottlenecks immediately. They can identify and resolve issues before they become major problems.

But it doesn't end there. Kafka's real-time data streaming capabilities also extend beyond the factory floor and into the vehicles themselves. By using Kafka's data pipelines, automakers gather a wealth of information and turn it into actionable insights. They can analyze driving patterns to improve fuel efficiency, enhance safety features, and even create personalized user experiences.

Kafka’s Advantages, Without The Engineering

As you can see, there’s a reason Kafka is one of the industry’s favorite foundations for real-time data pipelines. But there’s also a problem: all the components of a Kafka data pipeline we discussed above are notoriously hard to engineer, putting this solution out of reach for all but the most robustly staffed and specialized engineering teams.

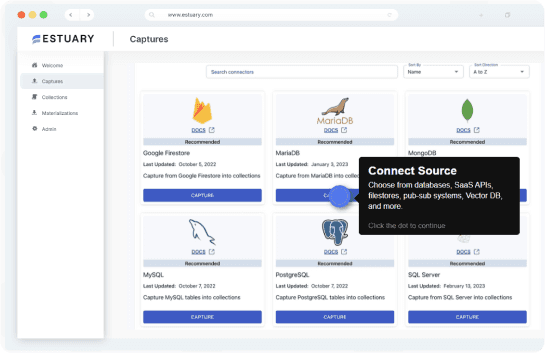

Fortunately, managed real-time data platforms are available today that offer all the advantages of Kafka contained in an easy-to-use dashboard. How? Simply put, by automating the infrastructure management of a message broker like Kafka, so all you have to do is plug in your other data systems.

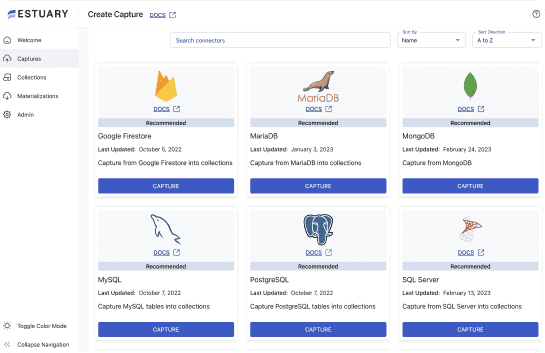

Our real-time data integration platform, Estuary Flow, is one of these platforms. It is a fully managed service built on an event broker similar to Kafka. When you use Flow, you get scalable, fault-tolerant real-time streaming without managing any infrastructure.

Estuary Flow provides built-in connectors for a wide range of source and destination systems (the equivalent of producers and consumers) so you can get up and running quickly. It has a powerful and flexible data transformation engine that transforms your data in any way you need before it's stored in your final destination. Estuary Flow is a highly scalable and reliable platform that can handle even the most demanding data pipelines.

Here are some specific examples of how Estuary Flow can help you achieve the same outcome as a Kafka data pipeline, with some added benefits:

- Already have Kafka deployed, but struggling to integrate it with a particular system? You can use Estuary Flow to connect Kafka to a database and load data into the database in real time. This can be used to create a real-time analytics dashboard or to feed data into a machine-learning model.

- Estuary Flow transforms and filters data as it flows through the pipeline. You can use it to clean up data, remove duplicates, or convert data into a different format.

- Use Estuary Flow to load data into a cloud storage service, such as Amazon S3 or Google Cloud Storage, or a data warehouse, like Snowflake or BigQuery. This lets you archive data or make it readily available for analysis.

- You can use Estuary Flow to monitor and troubleshoot your data pipeline. This helps identify problems and ensure that your data is flowing smoothly.

Conclusion

Kafka data pipelines are pivotal in today's data-driven world, and understanding them is crucial for organizations looking to stay ahead in the competitive landscape. They offer a scalable architecture that enables real-time data processing. By using a distributed messaging system like Apache Kafka, you can achieve high-throughput, fault-tolerant, and reliable data ingestion, transformation, and delivery.

However, Kafka data pipelines are challenging to set up and manage. Managed systems built on distributed messaging systems similar to Kafka can offer the same advantages with less work.

By using Estuary Flow, you can get robust, real-time streaming pipelines set up in minutes. You’ll enhance your business's data analytics capabilities and unlock valuable insights in real time. Take the next step today by signing up for Estuary Flow or reach out to us directly to discuss how we can assist you.