How to prevent your data warehouse from becoming a data swamp

Your data storage system might be a data swamp masquerading as a data warehouse. Here’s how to tell, and what to do.

A data warehouse is the central pillar of the modern data stack. And even if you’ve grown leery of the promises of the MDS — as many have — there’s no getting around the fact that a warehouse is a necessary part of most organizations’ data strategy.

Simply put, if your organization needs data analytics, it needs a data warehouse.

The problem is, it’s possible that you think you have a warehouse, but you don’t. Not really.

It’s all too easy to get misled by how storage platforms are marketed, or the simple positioning of a data system in the center of the stack. But neither of these things make your storage a proper data warehouse.

It may in fact be what we lovingly refer to as a data swamp: a big ol’ mess of data that makes your data opaque, your analytics frustrating, and your whole data stack seemingly ineffective.

Fortunately, it’s not too hard to figure out if you really have a data warehouse, or if it’s a data swamp. You simply need to shift your criteria.

What is a data swamp, anyway?

The term data swamp is a play on data lake.

Databricks defines a data lake as “a central location that holds a large amount of data in its native, raw format.” This sort of data storage can quickly become a disorganized dumping ground full of data that is forgotten, undiscoverable, messy, or otherwise unusable.

Hence, a data swamp.

We’ve wisely learned to treat data lakes with an abundance of caution. But what does a data swamp have to do with a data warehouse? Isn’t that a mix-up of analogies?

It would be, if all data warehouses were what they claim to be.

Because of its association with the modern data stack and other buzzy data architecture trends, it looks good to call your data repository a “warehouse.” Not many vendors are selling anything under the label “data lake.” No architecture team aspires to set up a storage system that is anything other than well-controlled and organized.

The result? Data storage systems are constantly being mislabeled, causing widespread confusion.

What really defines a data warehouse?

A data warehouse is a data repository designed to power analytics, and, in turn, business goals.

It really is that simple — and that complex. Like many concepts in data, there’s no formalized definition, but there is a general consensus that a warehouse is defined by its ability to effectively help people use data to meet business needs.

Here are a couple other ways of putting it:

- “A data warehouse is a large collection of business data used to help an organization make decisions.” – Talend

- “A data warehouse is a type of data management system that is designed to enable and support business intelligence (BI) activities, especially analytics. Data warehouses are solely intended to perform queries and analysis and often contain large amounts of historical data.” – Oracle

- “A data warehouse is a storage architecture designed to hold data extracted from transaction systems, operational data stores and external sources. The warehouse then combines that data in an aggregate, summary form suitable for enterprise-wide data analysis and reporting for predefined business needs.” – Gartner

This performance-focused definition leads us to a lot of implications, which we’ll touch on shortly.

Data warehouses: more than just OLAP

Today, we hear “data warehouse” and we think: Snowflake! BigQuery! Redshift! But these just so happen to be the types of products best positioned to help us succeed in the actual task of warehousing data in today’s (cloud-based) landscape.

The concept of a data warehouse has existed for years — well before the massive shift to the cloud. But it was cloud technology that allowed the concept to be expressed to its full extent.

Snowflake, BigQuery, and Redshift are all examples of cloud OLAP databases. That is, they are designed to handle analytical workloads by optimizing data for querying rather than transactions.

Today, an OLAP database in the cloud is pretty much a required foundation for an effective data warehouse. But you can’t just go buy one from a vendor and expect a fully-formed data warehouse.

By definition, your data warehouse must be structured to meet your business’s analytical needs. Because every organization has different data and different needs, there’s no such thing as a pre-packaged warehouse.

So, if you’ve purchased a cloud “warehouse” from a vendor, that’s actually just step one. Now, you have to define your strategy and architecture, or it can still become a swamp.

Is it a data warehouse or a data swamp?

Here’s how you actually tell if you’ve got a data warehouse or a data swamp: start with the business needs and work backward.

The ultimate goal of a data warehouse is to provide transparent, orderly, timely data for analytical and BI use cases.

If you’re a data consumer or analyst, you should be able to easily access, query, and operationalize data in the warehouse with the confidence that it has already been cleaned and meets a defined quality standard.

The warehouse should give both human users and BI tools easy access to the data they need to meet specific business outcomes. The query logic needed to consume this data should be relatively straightforward.

On the other hand, you know you have a data swamp if:

- You have multiple, de-centralized “sources of truth” for data analytics.

- Or, there’s one centralized location, but raw data is indiscriminately dumped there.

- You use many on-the-fly materialized views to bridge the gap between stored data and data in the shape that you actually need.

- The warehouse was architected without data consumer input regarding business outcomes.

- Data in the “warehouse” isn’t useable in your BI tool (you may think it’s the BI tool’s fault) or by analysts (who find themselves hacking together their own environments).

- Analysts are expected to use heavy query logic regularly to answer routine questions.

How to architect a data warehouse

If any of the warning signs listed above sound familiar, you might have a data swamp on your hands, or be headed in that direction.

Going from a data swamp to a functioning data warehouse can be a challenging process, but it’ll pay dividends down the line. Whether you’re starting from scratch or trying to fix the infrastructure you currently have in place, there are a few specific things you should focus on.

Start with your stakeholders

Your first step is to start a dialog between the technical team and the business stakeholders, or data consumers. This should come before modeling.

Data consumers bring a broad spectrum of use cases and technical abilities. Some may require clean, unmodified data that they can use to perform in-depth analysis. Others care most that the data is ready to be consumed by their BI tools.

In a given organization, there will be multiple cohorts of stakeholders with different interests. The warehouse must provide data to meet all these needs.

This is what makes your process unique, and is why a full data warehouse solution can’t just be bought from a vendor.

Use data warehouse modeling

With this in mind, you can model the warehouse. What you’re doing here is taking advantage of the multi-dimensional modeling capabilities of OLAP storage in a way that is unique to your organization’s data and business goals.

Data modeling is an entire discipline unto itself, and there are many ways to approach it, especially in the modern cloud environment. Quality vendors should provide guidance and documentation specific to their platform. For the sake of illustration in this article, we’ll discuss dimensional modeling using a simple star schema.

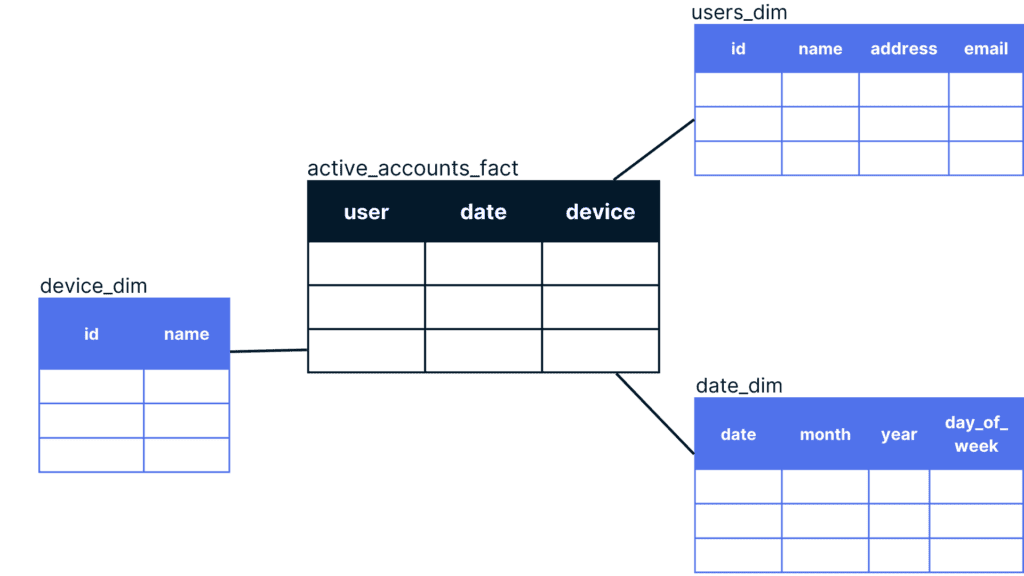

Star schemas classify data into fact tables and dimension tables, where the fact table stores observations and the dimension tables store associated descriptive details. The fact table is the center point of the star, and is joined to various dimension tables through defined keys.

Again, you should be meeting with your stakeholders to build the schema around business needs. For example:

- For a given fact (say, user activity), what details — or dimensions — are required to drive each business outcome?

- What grain, or level of detail, should be contained in each row for the data to be useful?

Then, you’ll want to factor in performance considerations. In the case of the star schema, each join within the warehouse can be expensive. So you might investigate whether some data can be pre-joined prior to landing in the warehouse.

Other types of architectures — for example, adding normalization or using the hybrid data vault approach — will have their own sets of unique considerations.

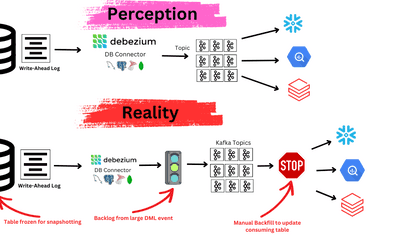

Ingest data intelligently

Another hallmark of a data swamp is unclean, unstructured, and sometimes inaccurate data.

To prevent this from happening to your warehouse, take advantage of the data ingestion process. You can use the tools that capture data to clean it up and organize it before it lands in your warehouse.

This goes beyond basic ELT pipelining. You’ll want to consider data schemas, report errors, and curate data during ingestion. For more on this topic, check my other post, Why ELT won’t fix your data problems.

Quality control of incoming data is more challenging than ever before. Gone are the days when most of the data coming to a data warehouse came from another database with an easily inferred schema. Today, we often encounter event-based data, SaaS, and API sources. Unstructured and semi-structured data is also becoming more common — social media data, for instance, can’t be so neatly defined by a schema.

This was the initial appeal of data lakes: to allow all these types of data into storage without excessive modification. However, as we’ve seen, this comes at a price.

To strike a balance, you need a combination of strategy and tools.

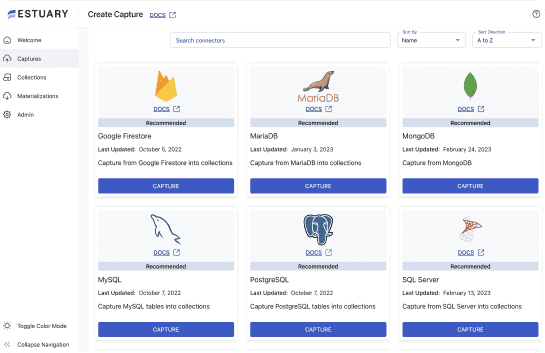

At Estuary, we’ve created our DataOps platform, Flow, with that in mind. Flow uses JSON schema to standardize and enforce quality standards for different collections of data from a wide variety of sources.

Data ingestion in Flow features other tools that can help you prevent swampy data. They include the options to add intelligent transformations, pre-join, or select a subset of data before it arrives in the warehouse.

Iterate

Your schema should be designed to facilitate your most common or important queries. These will undoubtedly change, as will the data in your warehouse. So, don’t expect your architecture to be static, either. Design with flexibility in mind.

It helps to integrate the principles of DataOps into warehouse management. Apply as much automation as possible for deployments and testing, and build in a short life cycle to account for the ongoing development of the model.

This goes for the broader view of your strategy, as well. Don’t worry about whether your warehouse is part of a “modern data stack,” or whatever is currently in vogue. Focus on flexibility and governance, and never lose sight of the use cases your data serves.

You can find the Estuary team — and more articles like this — by following us on LinkedIn. Our code is on GitHub.

Want to try Flow? Sign up for a free trial in the web app.