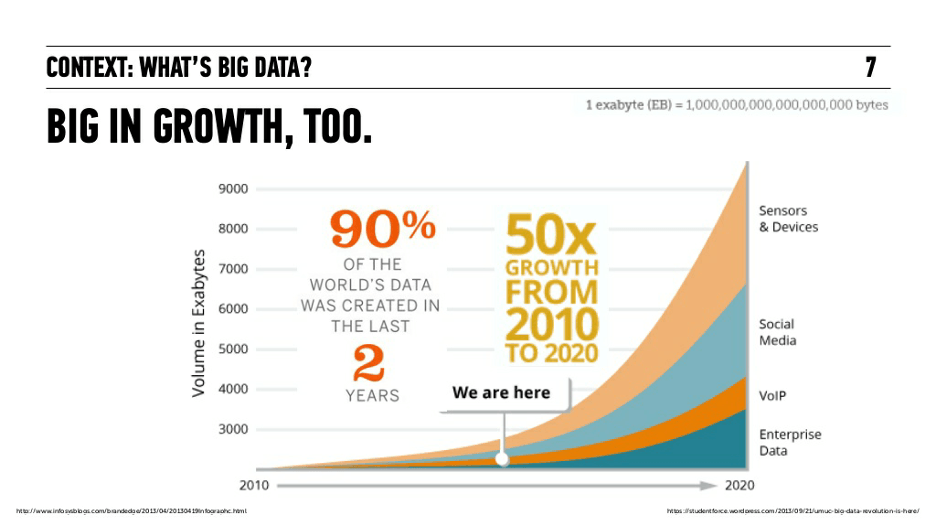

Roughly 120 zettabytes of data will be produced this year. Let’s put that in perspective.

If you allow an 11 oz cup of coffee to stand for a gigabyte of data, a single zettabyte would match the volume of the Great Wall of China. Now multiply that by 120!

Next year, that figure is expected to reach 147 zettabytes. According to a survey, close to 99.5% of this data goes unused, possibly because most of it is raw and scattered and therefore of little value as it stands.

This means there’s a pressing need to collect this data and combine it in a single repository to extract meaningful, actionable insights from it.

That’s where ETL comes in.

Understanding ETL Batch Processing

Simply put, batch processing refers to data processing in batches over a specific timeframe. The batch method lets users process data as and when computing resources are available.

In batch processing, data is stored first and then processed during a scheduled period called the ‘batch window’.

Batch ETL processing is the seasoned veteran, while stream ETL processing or real-time ETL processing is the new kid on the block.

In the streaming model, data is analyzed piece by piece in real time (near real time, to be accurate).
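To make the contrast concrete, here is a minimal sketch in Python. The function names and the sample order amounts are made up for illustration; the only point is that the batch function sees the whole stored batch at once, while the streaming function produces a result after every record.

```python
from typing import Iterable, Iterator


def batch_total(stored_records: list[float]) -> float:
    """Batch style: wait until the whole batch is stored, then process it in one pass."""
    return sum(stored_records)


def streaming_totals(records: Iterable[float]) -> Iterator[float]:
    """Streaming style: emit an updated result as each record arrives."""
    running = 0.0
    for amount in records:
        running += amount
        yield running


orders = [12.5, 7.0, 30.25]  # hypothetical order amounts
print(batch_total(orders))             # 49.75, one answer after the batch window
print(list(streaming_totals(orders)))  # [12.5, 19.5, 49.75], an answer per record
```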

What is ETL Batch Processing?

In ETL batch processing, data becomes available for analysis after a delay, anywhere from an hour to a few days. You can run batch ETL on a fixed schedule, for instance, every 24 hours. You can also set the system to run batch ETL once the accumulated data crosses a certain threshold.
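The two triggers described above (a schedule and a data-volume limit) can be sketched as a single check. This is only an illustration: `should_run_batch`, the 24-hour interval, and the 100,000-row limit are assumptions chosen for the example, not settings from any particular tool.

```python
from datetime import datetime, timedelta


def should_run_batch(now, last_run, pending_rows,
                     interval=timedelta(hours=24), max_pending_rows=100_000):
    """Return True when either the schedule or the volume trigger fires."""
    schedule_due = now - last_run >= interval       # e.g. every 24 hours
    volume_due = pending_rows >= max_pending_rows   # e.g. too much data has piled up
    return schedule_due or volume_due


last_run = datetime(2024, 1, 1, 2, 0)
print(should_run_batch(datetime(2024, 1, 1, 12, 0), last_run, pending_rows=500))      # False
print(should_run_batch(datetime(2024, 1, 2, 2, 0), last_run, pending_rows=500))       # True
print(should_run_batch(datetime(2024, 1, 1, 12, 0), last_run, pending_rows=250_000))  # True
```

In practice a scheduler would call a check like this periodically and start the batch job when it returns `True`.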

The basic parameters for efficient ETL batch processing are as follows:

- Where are the inputs and outputs?

- Who’s submitting the job?

- What program are you running?

- When will you run the job?
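One way to keep these four questions answered in one place is a small job specification. The sketch below is hypothetical: the `BatchJobSpec` class, the bucket paths, the service account name, and the script name are all invented for illustration, and the schedule is written in standard cron syntax (02:00 every day).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BatchJobSpec:
    input_uri: str     # where the inputs are
    output_uri: str    # where the outputs go
    submitted_by: str  # who is submitting the job
    program: str       # what program is being run
    schedule: str      # when the job runs (cron syntax)

    def describe(self) -> str:
        return (f"{self.submitted_by} runs {self.program} on schedule "
                f"'{self.schedule}': {self.input_uri} -> {self.output_uri}")


# All values below are placeholders, not real resources.
nightly = BatchJobSpec(
    input_uri="s3://example-bucket/raw/sales/",
    output_uri="s3://example-bucket/warehouse/daily_sales/",
    submitted_by="etl-service",
    program="daily_sales_etl.py",
    schedule="0 2 * * *",
)
print(nightly.describe())
```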

Here’s a real-world example of where batch ETL might come in handy.

Consider a fast-food restaurant.

A chain like Burger King might run batch ETL once each restaurant has closed for the night to calculate the daily revenue for every location.

Streaming ETL would be suboptimal here since you don’t need to constantly monitor a restaurant’s revenue for every minute of the day.
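As a rough sketch of that nightly job, the aggregation itself can be very small. The store IDs and order amounts below are invented, and a real pipeline would read the day’s orders from the point-of-sale system rather than from a list in memory.

```python
from collections import defaultdict
from decimal import Decimal


def daily_revenue_by_location(orders):
    """Sum the day's order amounts per restaurant in one pass over the batch."""
    totals = defaultdict(Decimal)  # missing locations start at Decimal("0")
    for location, amount in orders:
        totals[location] += Decimal(amount)
    return dict(totals)


# Hypothetical orders collected during the day: (location, amount as text).
orders = [("store-001", "8.49"), ("store-002", "12.00"), ("store-001", "3.25")]
result = daily_revenue_by_location(orders)
print(result["store-001"])  # 11.74
print(result["store-002"])  # 12.00
```

`Decimal` is used instead of `float` so that currency amounts add up exactly.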

Purpose of Building an ETL Pipeline with Batch Processing

By leveraging batch ETL processing, you can improve efficiency by setting processing priorities. Batch processing also allows you to finish data jobs at a time that makes the most sense.

Therefore, you should use ETL batch processing in the following scenarios:

- When real-time data analysis isn’t critical.

- When joining tables in relational databases.

- When you have an external dependency that’s batched in nature.

- When the analysis accesses the entire batch, for instance, sorting the entire dataset.

Batch processing also plays a pivotal role when your data sources are legacy systems.

Batch ETL processing is also preferred to analyze data generated on mainframes. Accessing and integrating mainframe data into modern analytics environments is tedious.

Advantages and Disadvantages of Batch Processing for ETL

Given that batch processing has been around for a while, it has its set of pros and cons. You’ll need to weigh the tradeoffs and use your business requirements to know if batch ETL is the right method for you. Let’s start with the strong points:

- Batch processing is simpler to implement than stream processing. It doesn’t need the hardware or system support that stream processing needs to function properly. On top of this, a batch processing system needs less maintenance than a stream processing system once you’ve built it.

- A lot of legacy systems aren’t compatible with streaming ETL. So, if legacy technology is a part of your workflow, you’ll need to employ batch processing as a part of your ETL pipeline.

- Since batch processing automates a large chunk of processing jobs while minimizing user interaction, the scope for human error goes down. The resulting gains in accuracy and precision translate into higher data quality.

Here are a few reasons why the focus has shifted from batch-processing ETL to streaming ETL recently:

- If the system crashes in the final stages of batch ETL processing, the entire batch fails.

- As the volume of data increases, so does the processing time. Adding to your woes, this might overlap with the next scheduled runtime, increasing the chances of erroneous ingestion.

- Batch ETL is recommended for organizations that use repetitive operations. But if a new data type enters the fold, the system will fail to recognize it, causing inaccuracy.
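The overlap problem mentioned above (a long run colliding with the next scheduled one) is often handled with a simple guard that refuses to start a second run. The sketch below is one possible approach using an exclusive lock file; the names `single_run_lock` and `BatchAlreadyRunning` and the lock file name are made up for this example.

```python
import os
import tempfile
from contextlib import contextmanager


class BatchAlreadyRunning(RuntimeError):
    """Raised when a previous batch run still holds the lock."""


@contextmanager
def single_run_lock(lock_path):
    try:
        # O_EXCL makes creation fail if the file already exists.
        fd = os.open(lock_path, os.O_CREAT | os.O_EXCL | os.O_WRONLY)
    except FileExistsError:
        raise BatchAlreadyRunning(f"previous run still holds {lock_path}") from None
    try:
        os.write(fd, str(os.getpid()).encode())  # record the owner for debugging
        yield
    finally:
        os.close(fd)
        os.remove(lock_path)


lock_path = os.path.join(tempfile.mkdtemp(), "nightly_etl.lock")
with single_run_lock(lock_path):
    try:
        with single_run_lock(lock_path):  # simulates the next scheduled run
            overlapped = True
    except BatchAlreadyRunning:
        overlapped = False
print(overlapped)  # False: the second run was refused
```

Note that a process killed abruptly would leave a stale lock file behind, so a production setup would also need a way to detect and clear those.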

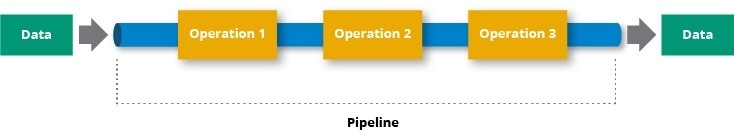

Understanding ETL

ETL, or Extract-Transform-Load, is a process that extracts raw data from multiple disparate sources, transforms the data (cleans the data to remove duplicates and converts them to a standard format), and loads it to a central repository to be accessed by all the stakeholders to simplify analysis.
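Here is a minimal, self-contained sketch of those three stages. It assumes two tiny in-memory sources (one CSV, one JSON) and uses an in-memory SQLite database as a stand-in for the central repository; the table and field names are illustrative only.

```python
import csv
import io
import json
import sqlite3

# Two hypothetical sources holding overlapping customer records.
CSV_SOURCE = "email,name\nANA@example.com,Ana\nbob@example.com,Bob\n"
JSON_SOURCE = '[{"email": "ana@example.com", "name": "Ana"}, {"email": "cy@example.com", "name": "Cy"}]'


def extract():
    """Extract: pull raw rows from each source."""
    rows = list(csv.DictReader(io.StringIO(CSV_SOURCE)))
    rows += json.loads(JSON_SOURCE)
    return rows


def transform(rows):
    """Transform: standardize the email format and drop duplicates."""
    seen, clean = set(), []
    for row in rows:
        email = row["email"].strip().lower()
        if email not in seen:
            seen.add(email)
            clean.append((email, row["name"].strip()))
    return clean


def load(rows, conn):
    """Load: write the cleaned rows to the central repository."""
    conn.execute("CREATE TABLE IF NOT EXISTS customers (email TEXT PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO customers VALUES (?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract()), conn)
print(conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0])  # 3
```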

Data is required by every team today to guide the decision-making process, irrespective of whether you belong to the customer support team or the marketing team. ETL allows every department to be equipped with the right set of information to help improve business processes, and in turn boost the bottom line for their enterprises.

Here are a few real-world instances where ETL might come in handy:

- Medical Data Analysis: Traditional data warehouses don’t support unstructured or semi-structured data. This makes it difficult to properly use text data sets for healthcare systems. This is where ETL can come in. By using advanced semantics, ETL can distinguish between variations in medical terms, improving the quality of data analysis for healthcare systems.

- Marketing Campaigns: If retailers want to develop a marketing campaign for a new product line, they’ll need a clear understanding of the target market. This requires pulling customer-related data from various databases along with demographic information about customers held in a CRM. To simplify their workload, retailers can use ETL to build a single source of truth for BI tools and customer data, and discover which consumer segments are most likely to be interested in their new product range.

- Financial Reporting and Analysis: You’d need to look at quarterly reports, determine the ROI, and compare your performance with your competitors to get a pulse on the financial stability of your business. The data would be spread across a large number of sources, and manual entry of all these data points introduces a high chance of error. You can reduce the chance of error and automate this process with ETL methods, allowing your business to make better financial decisions.

Building an ETL Pipeline with Batch Processing

Every batch in this process contains a large volume of data. Before loading the data into the target warehouse, batch ETL will transform the collated data through a method that aligns with your enterprise requirements.

Steps to Building an ETL Pipeline with Batch Processing

Here are the steps involved in building an ETL pipeline with Batch Processing:

- Step 1: First, create reference data. This data contains permissible values or static references that your data might include. You might need this when transforming your data from the source to the target.

- Step 2: To set up a connection and extract the data from the source, you either need to build the connectors between the source and the target yourself or check whether a third-party tool supports the connectors you want. The source could be anything from an RDBMS to CSV, JSON, or XML files.

- Step 3: Time to validate. Once you’ve extracted the data, check to see if it’s in the expected range. If it’s not, you’ll have to reject it. For instance, if you need to extract data for the past week, you’ll have to reject any data that’s older than a week.

- Step 4: After validation comes transformation. Common transformations include cleaning, deduplication, business rule application, standardization, aggregation, data integrity checks, and much more.

- Step 5: All the transformed data needs to be stored somewhere before you move it to a central repository. That’s where a staging area comes in. The staging layer allows you to roll back the data easily if anything goes wrong. It also gives you a basis for generating audit reports for diagnosis, analysis, or regulatory compliance.

- Step 6: Once the data is available in the staging layer, the next phase is moving the data to the destination. You can either overwrite the existing information or append the data whenever your ETL pipeline loads up a new batch.

- Step 7: This is the most pivotal part of automating an ETL pipeline. Pick a schedule to load weekly, monthly, daily, or a custom range that suits your business needs. The data loaded with the schedules can have a timestamp to identify the load date. Task dependencies and scheduling need to be carefully executed to avoid performance and memory issues.
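The steps above can be sketched end to end in a few dozen lines. This is a simplified illustration rather than a production design: the reference set, field names, and table names are invented, the extracted rows are hard-coded in place of a real connector (Step 2), and SQLite stands in for both the staging area and the destination.

```python
import sqlite3
from datetime import date, timedelta

# Step 1: reference data (permissible values), hypothetical for this example.
VALID_CURRENCIES = {"USD", "EUR"}


def validate(rows, today, max_age_days=7):
    """Step 3: keep rows from the past week with a known currency; reject the rest."""
    cutoff = today - timedelta(days=max_age_days)
    accepted, rejected = [], []
    for row in rows:
        ok = cutoff <= row["day"] <= today and row["currency"] in VALID_CURRENCIES
        (accepted if ok else rejected).append(row)
    return accepted, rejected


def transform(rows):
    """Step 4: deduplicate on order_id and standardize amounts to integer cents."""
    unique = {row["order_id"]: row for row in rows}
    return [(r["order_id"], r["day"].isoformat(), r["currency"], round(r["amount"] * 100))
            for r in unique.values()]


def stage_and_load(conn, rows, load_date):
    """Steps 5-6: write to staging, then append to the target with a load date."""
    conn.execute("CREATE TABLE IF NOT EXISTS staging_orders (order_id, day, currency, cents)")
    conn.execute("CREATE TABLE IF NOT EXISTS orders (order_id, day, currency, cents, load_date)")
    with conn:  # commits on success, rolls back if anything raises
        conn.execute("DELETE FROM staging_orders")
        conn.executemany("INSERT INTO staging_orders VALUES (?, ?, ?, ?)", rows)
        conn.execute("INSERT INTO orders SELECT *, ? FROM staging_orders",
                     (load_date.isoformat(),))


today = date(2024, 3, 8)
extracted = [  # stand-in for Step 2 (extraction from a real source)
    {"order_id": 1, "day": date(2024, 3, 7), "currency": "USD", "amount": 19.99},
    {"order_id": 1, "day": date(2024, 3, 7), "currency": "USD", "amount": 19.99},  # duplicate
    {"order_id": 2, "day": date(2024, 2, 1), "currency": "USD", "amount": 5.00},   # too old
    {"order_id": 3, "day": date(2024, 3, 6), "currency": "XYZ", "amount": 7.50},   # unknown currency
]
accepted, rejected = validate(extracted, today)
conn = sqlite3.connect(":memory:")
stage_and_load(conn, transform(accepted), load_date=today)
loaded = conn.execute("SELECT order_id, cents, load_date FROM orders").fetchall()
print(loaded)  # [(1, 1999, '2024-03-08')]
```

For Step 7, a scheduler such as cron would invoke a script like this on the chosen cadence; the `load_date` column plays the role of the timestamp that identifies each load.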

Best Practices for ETL Pipeline with Batch Processing

Here are a few best practices to keep in mind when building an ETL pipeline with batch processing:

- Really Know Your Data: Before running an ETL pipeline with batch processing, it is important to understand your data. Analyze the data sources, the values, the format, and the size of the data. This will help you decide the best approach for running an ETL pipeline with batch processing.

- Automate What You Can ➡ $$$: Automating parts of the ETL pipeline can help to optimize the process and reduce manual effort. It can also help to make sure that the jobs are running in a timely manner and that data is being loaded efficiently.

- Plan Ahead: It is important to plan ahead when running an ETL pipeline with batch processing. Consider the data load size, the speed at which the data needs to be processed, and the number of individual jobs that will need to be run. This will help you to create an efficient batch-processing pipeline.

- Test. Validate. Repeat: It is important to test and validate your ETL pipeline regularly. Test with real-world data and edge cases to discover and correct any potential errors before deploying the pipeline. This will ensure correct data loading and job execution.

- Keep a Vigilant Eye on Your Resources: Monitor the resources used by the batch processing pipeline regularly. This will help you identify any potential bottlenecks in the system and take corrective action to ensure that the pipeline is running smoothly. Set up alerts and notifications to quickly detect and resolve any issues that may arise during batch processing.

- Guard Your Data. Fiercely: Securing your data may involve encrypting it, using robust authentication/authorization mechanisms, and applying data masking or anonymization techniques to protect sensitive data. Better safe than sorry, pal.
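To illustrate the masking and anonymization point in the last item, here is a small sketch using only the Python standard library. The function names and the demo key are assumptions for this example; in a real pipeline the key would come from a secret store rather than from source code.

```python
import hashlib
import hmac


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace a sensitive value with a keyed hash so records can still be joined."""
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(secret_key, normalized, hashlib.sha256).hexdigest()


def mask_email(email: str) -> str:
    """Keep only the first character of the local part for display purposes."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"


key = b"demo-key-load-from-a-secret-store"  # placeholder, never hard-code real keys
print(mask_email("ana.lopez@example.com"))  # a***@example.com
token = pseudonymize("Ana.Lopez@example.com", key)
print(len(token))  # 64 hex characters
```

Because the hash is keyed and the input is normalized first, the same customer maps to the same token across batches, while the original value is not stored in the warehouse.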

Choosing an ETL Tool

The global data pipeline tools market was valued at $7.1 billion in 2021 and is expected to expand at a CAGR of 24.5% from 2022 to 2030. The primary factors that drove the increased adoption of data pipeline tools were:

- Increased investments in advanced technologies.

- Rise in the adoption of the Internet of Things (IoT) and artificial intelligence.

- Need for reduced data latency.

Of this market, ETL data pipeline tools enjoyed the lion’s share of the revenue (39.5%) back in 2021, and it’s only been ramping up since. Let’s look at a few factors you can use to pick an ETL tool suited to your needs:

- Support for Batch and Stream Data Ingestion: We’ve talked about use cases where batch processing is the ideal candidate. Similarly, there are situations where stream data ingestion is the clear winner. For an enterprise like yours, a healthy mix of the two is recommended. Therefore, you should look for a tool that supports both batch and stream data ingestion based on your needs.

- Ease of Use: ETL tools need to be easy to use, since that allows people other than seasoned data engineers to take them for a whirl too. Therefore, when looking for an ETL tool for your workflow, factor in how easy it is to set up a new ETL process with that tool or modify an existing one.

- Pricing: ETL tools use various pricing models these days. Pricing could be based on consumption, the number of data sources used, or the number of users using the software. Pick a tool that lets you try it out for free, provides free historical loads, and replicates data from new data sources.

- Maintenance: Once you have your ETL tool up and running, the next pertinent questions to ask should be about maintenance. Will you need a lot of technical know-how to keep your ETL pipeline running without hitches? If one of your data sources is facing problems, what’s the turnaround time for discovering the root cause and fixing it? Keep these questions in mind when evaluating ETL tools.

Here are a few ETL tool providers that have made a splash in the market:

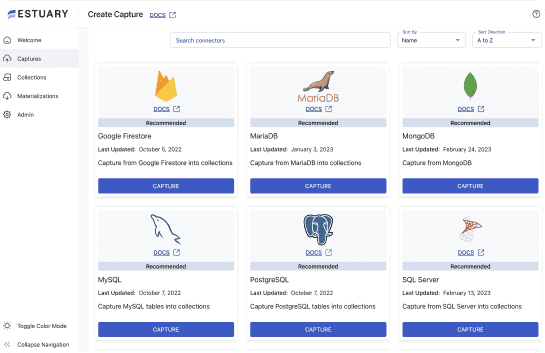

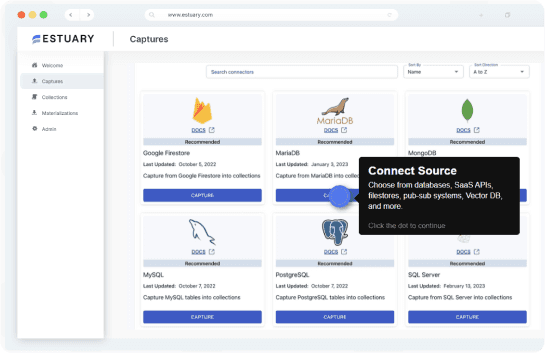

- Estuary: Estuary offers managed CDC and ETL pipelines with streaming SQL transforms. Built for data architects, engineers, and analysts, it also supports the Airbyte protocol for batch-based connectors. Give Estuary Flow a try for free with unparalleled hands-on support!

- Airbyte: Airbyte provides a great solution for data engineers who prefer open-source solutions and need flexibility in data integration processes, thanks to its ease of deployment, extensibility, and data reliability.

- Fivetran: Fivetran is a cloud-based ETL tool that specializes in automated data integration. It is known for its simple setup, fast data replication, and automated schema management, making it an excellent choice for data engineers looking for a hassle-free, automated ETL solution.

Conclusion

The recent data explosion has turned the traditional ETL process into a liability for data-driven organizations, because they need continuous data integration for real-time insights. Enterprises are replacing or supplementing traditional ETL with cloud-based data pipelines that offer real-time insights, a shift often called data modernization.

But even in a rapidly changing data landscape, batch processing hasn’t lost its sheen and continues to be a pivotal part of various operations as we discussed in this article. Along with that, we went over the steps and best practices you can keep in mind when building an ETL pipeline with batch processing to get you started.

We’ll leave you with this: know your requirements, since a hybrid of batch and stream processing will often serve you best, and get cookin’! Because the real challenge isn’t choosing between the two; it’s knowing the right mix of both to boost your bottom line today.